
FOREWORD

Earned Value Management (EVM) is a widely accepted industry best practice for program management

that is used across the Department of Defense (DoD), the Federal government, and the commercial sector.

Government and industry program managers use EVM as a program management tool to provide

situational awareness of program status and to assess the cost, schedule, and technical performance of

programs. EVM is meant to be flexible and mirror the management practices of the contractor, not to

impose burdensome requirements. Whenever possible, the Government should tailor management and

EVM requirements to leverage the contractor’s existing processes and data generated by those processes

to obtain sufficient insight into program cost, schedule, and technical performance. An EVM System

(EVMS) is the management control system that integrates a program’s work scope, schedule, and cost

parameters for optimum program planning and control.

To be effective, EVM practices and competencies must be integrated into the program manager’s

acquisition decision-making process. In addition, the data provided by the EVMS must be timely, accurate,

reliable, and auditable. Finally, the EVMS must be implemented in a disciplined manner consistent with

the 32 Guidelines contained in the Electronic Industries Alliance Standard-748 EVMS (EIA-748) (hereafter

referred to as the “Guidelines”).

The Guidelines represent characteristics and objectives of a management and control system for organizing,

planning, scheduling, budgeting, performance measurement, forecasting, analysis, and baseline change

control. As such, the Guidelines are interrelated and foundational in the design, implementation, and

operation of an EVMS. Therefore, a supplier has the opportunity to design a management and control

system with the flexibility of applying these Guidelines in a manner that uniquely meets the organization’s

needs in procedural guidance and implementation.

Part 1 of the Earned Value Management Implementation Guide (EVMIG) (hereafter referred to as “this

guide”) describes EVM Concepts and Guidelines. Part 2 provides guidance for Government use of EVM,

including guidance for applying EVM requirements to contracts, an introduction to analyzing performance,

and a discussion of baseline review and maintenance and other post-award activities. The appendices

contain additional reference material.

Note that DoD EVM policy applies to contracts with industry, as well as to intra-government activities.

Throughout this document, the term “contract” refers to both contracts with private industry and

agreements with intra-governmental activities that meet the DoD reporting thresholds. Similarly, the term

“contractor” refers to entities within both private industry and government.

This document is intended to serve as the central EVM guidance document for DoD personnel. Throughout

this guide, references are made to additional sources

of information such as EVMS standards, handbooks, guidebooks, and websites. Consult these additional

sources as appropriate (reference Appendix A for a list of these documents and hyperlinks to these

resources).

DoD EVMIG


FOREWORD

PART 1: EARNED VALUE MANAGEMENT CONCEPTS & GUIDELINES

SECTION 1.1: EARNED VALUE MANAGEMENT

1.1.1 Concepts of Earned Value Management

1.1.2 EVM and Management Needs

1.1.3 Uniform Guidance

SECTION 1.2: EARNED VALUE MANAGEMENT SYSTEM GUIDELINES

1.2.1 Earned Value Management System (EVMS)

1.2.2 EVMS Guidelines Concept

1.2.3 System Compliance and Acceptance

1.2.4 System Documentation

1.2.5 Cost Impacts

1.2.6 Conclusion

PART 2: PROCEDURES FOR GOVERNMENT USE OF EARNED VALUE

SECTION 2.1: APPLYING EARNED VALUE MANAGEMENT

2.1.1 Overview

2.1.2 Government EVM Organizations

2.1.3 Roles and Responsibilities

SECTION 2.2: PRE-CONTRACT ACTIVITIES

2.2.1 Overview

2.2.2 Department of Defense Requirements

2.2.3 General Guidance for Program Managers

2.2.4 Acquisition Strategy/Acquisition Plan

2.2.5 Preparation of the Solicitation

2.2.6 Source Selection Evaluation

2.2.7 Preparation of the Contract

SECTION 2.3: POST-AWARD ACTIVITIES – INTEGRATED BASELINE REVIEWS

2.3.1 Overview

2.3.2 Purpose of the IBR

2.3.3 IBR Policy and Guidance

2.3.4 IBR Focus

2.3.5 IBR Team

2.3.6 IBR Process

2.3.7 IBR Results

SECTION 2.4: POST-AWARD ACTIVITIES – SYSTEM COMPLIANCE

2.4.1 Overview

2.4.2 EVMS Approval

2.4.3 EVMS Surveillance and Maintenance

2.4.4 System Changes

2.4.5 Reviews for Cause (RFCs)

2.4.6 Deficiencies in Approved EVMS

2.4.7 System Disapproval

2.4.8 Deficiencies in Disapproved or Not Evaluated Systems

SECTION 2.5: OTHER POST-AWARD ACTIVITIES

2.5.1 Overview

2.5.2 Maintaining a Healthy Performance Measurement Baseline (PMB)

2.5.3 EVMS and Award Fee Contracts

2.5.4 Performance Data

2.5.5 EVM Training

2.5.6 Adjusting Level of Reporting During Contract Execution

APPENDIX A: EVM GUIDANCE RESOURCE ROADMAP

APPENDIX B: GUIDELINES-PROCESS

APPENDIX C: ESSENTIAL ELEMENTS OF A BUSINESS CASE ANALYSIS

APPENDIX D: SAMPLE AWARD FEE CRITERIA

APPENDIX E: SAMPLE CONTRACT DATA REQUIREMENTS LIST FORMS

APPENDIX F: SAMPLE STATEMENT OF WORK PARAGRAPHS

APPENDIX G: GLOSSARY OF TERMS


PART 1: EARNED VALUE MANAGEMENT CONCEPTS & GUIDELINES

SECTION 1.1: EARNED VALUE MANAGEMENT

1.1.1 Concepts of Earned Value Management

Earned Value Management (EVM) is a program management technique for measuring program

performance and progress in an objective manner. It integrates the technical, cost, and schedule objectives

of a contract to facilitate risk identification and mitigation. During the planning phase, a Performance

Measurement Baseline (PMB) is developed by time phasing budget resources for defined work. As work

is performed and measured against the PMB, the corresponding budget value is “earned.” From this

Earned Value (EV) metric, Cost Variances and Schedule Variances may be determined and analyzed.

From these basic variance measurements, the Program Manager (PM) can identify significant drivers,

forecast future cost and schedule performance, and construct corrective action plans as necessary to

improve program performance. EVM therefore incorporates both performance measurement (i.e., what is

the program status and when will the effort complete) and performance management (i.e., what we can do

about it). EVM provides significant benefits to both the government and the contractor.
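The relationship between the PMB, earned value, and the two variances can be illustrated with a small calculation. The sketch below is illustrative only and is not part of DoD policy. It assumes the conventional definitions, cost variance = EV minus actual cost (AC) and schedule variance = EV minus planned value (PV), where PV is the cumulative time-phased budget from the PMB. The function names and the monthly figures are hypothetical.

```python
from itertools import accumulate


def planned_value(time_phased_budget, period):
    """Cumulative budget planned through `period` (1-based) of the PMB."""
    return list(accumulate(time_phased_budget))[period - 1]


def variances(pv, ev, ac):
    """Return (cost variance, schedule variance); negative values are unfavorable."""
    cost_variance = ev - ac
    schedule_variance = ev - pv
    return cost_variance, schedule_variance


# Hypothetical PMB: budget per month, in $K, for a four-month effort.
pmb = [100, 150, 200, 150]

pv = planned_value(pmb, 2)      # budget planned through month 2 -> 250
ev = 220                        # budgeted value of the work actually completed
ac = 260                        # actual cost of that completed work
cv, sv = variances(pv, ev, ac)  # -> (-40, -30)
print(f"PV={pv} EV={ev} AC={ac} CV={cv} SV={sv}")
```

In this hypothetical case both variances are negative: the work completed cost $40K more than its budget, and $30K less work was completed than planned through month 2.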

1.1.2 EVM and Management Needs

Insight into the contractor’s performance (specifically program management and control) is a fundamental

requirement for managing any major acquisition program. Contractor cost and schedule performance data

must:

• Relate time-phased budgets to specific contract tasks and/or Statements of Work (SOWs)

• Objectively measure work progress

• Properly relate cost, schedule, and technical accomplishments

• Enable informed decision making and corrective action

• Be timely, accurate, reliable, and auditable

• Allow for estimation of future costs and schedule impacts

• Supply managers at all levels with status information at the appropriate level

• Be derived from the same Earned Value Management System (EVMS) used by the contractor to

manage the contract

• Integrate subcontract EVMS data into the prime contractor’s EVMS

1.1.3 Uniform Guidance

This document provides uniform guidance for Department of Defense (DoD) PMs and other stakeholders

responsible for implementing EVM. It also provides a consistent approach to applying EVM based on the

particular needs of the program that is both cost effective and sufficient for integrated program

management. Application of this guide across all DoD acquisition commands should result in improved

program performance and greater consistency in program management practices throughout the contractor

community.


SECTION 1.2: EARNED VALUE MANAGEMENT SYSTEM GUIDELINES

1.2.1 Earned Value Management System (EVMS)

An EVMS is an integrated management system, together with its related subsystems, that allows for the following:

• Planning all work scope for the program from inception to completion

• Assignment of authority and responsibility at the work performance level

• Integration of the cost, schedule, and technical aspects of the work into a detailed baseline plan

• Objective measurement of progress at the work performance level with EVM metrics

• Accumulation and assignment of actual direct and indirect costs

• Analysis of variances or deviations from plans

• Summarization and reporting of performance data to higher levels of management for action

• Forecast of achievement of milestones and completion of contract events

• Estimation of final contract costs

• Disciplined baseline maintenance and incorporation of baseline revisions in a timely manner
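As a rough illustration of the forecasting and final-cost items in this list, the sketch below uses one widely used index-based formula: EAC = AC + (BAC - EV) / CPI, with CPI = EV / AC. This guide does not prescribe that formula. The formula choice, the function names, and the sample values are assumptions made for illustration only.

```python
import math


def cost_performance_index(ev: float, ac: float) -> float:
    """CPI = EV / AC; values below 1.0 indicate a cost overrun to date."""
    if ac <= 0:
        raise ValueError("actual cost must be positive")
    return ev / ac


def estimate_at_completion(bac: float, ev: float, ac: float) -> float:
    """Index-based EAC: actuals to date plus remaining work scaled by CPI."""
    cpi = cost_performance_index(ev, ac)
    if cpi <= 0:
        raise ValueError("earned value must be positive to project from CPI")
    return ac + (bac - ev) / cpi


# Hypothetical contract status, in $K.
bac = 1000   # budget at completion
ev = 400     # earned value to date
ac = 500     # actual cost to date

eac = estimate_at_completion(bac, ev, ac)
print(f"CPI={cost_performance_index(ev, ac):.2f} EAC={eac:.0f}")
assert math.isclose(eac, 1250.0)
```

An index-based projection of this kind assumes that cost efficiency to date continues for the remaining work. It is a cross-check on, not a substitute for, the estimate produced by the contractor's own EVMS.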

Private companies utilize business planning and control systems for management purposes. Tailored,

adapted, or developed for the unique needs of companies, these planning and control systems rely on

software packages and other Information Technology solutions. While most of the basic principles of an

EVMS are already inherent in good business practices and program management, there are

unique EVM guidelines that require a more disciplined approach to the integration of management

systems.

1.2.2 EVMS Guidelines Concept

EVM is based on the premise that the government cannot impose a single integrated management system

solution for all contractors. The Guidelines approach recognizes that no single EVMS meets every

management need for all companies. Due to variations in organizations, products, and working

relationships, it is not prudent to prescribe a universal system. Accordingly, the Guidelines approach

establishes a framework within which an adequate integrated cost/schedule/technical management system

fits. The EVMS Guidelines describe the desired outcomes of integrated performance management across

five broad categories of activity: Organization; Planning, Scheduling, and Budgeting; Accounting

Considerations; Analysis and Management Reports; and Revisions and Data Maintenance. Please

reference Appendix B for the Guidelines – Process Matrix.

While the Guidelines are broad enough to allow for common sense application, they are specific enough

to ensure reliable performance data for the buying activity. The Guidelines do not address all of a

contractor's needs for day-to-day or week-to-week internal controls such as subcontractor status reports.

These important management tools should augment the EVMS as effective elements of program

management.

The Guidelines have been published as the Electronic Industries Alliance (EIA) standard EIA-748, Earned

Value Management Systems. The DoD only recognizes the Guideline statements within the EIA-748 and

periodically reviews the Guidelines to ensure they continue to meet the government’s needs.

The Guidelines provide a consistent basis to assist the government and the contractor in implementing and

maintaining an acceptable EVMS. The DoD Earned Value Management System Interpretation Guide


(EVMSIG) provides the overarching DoD interpretation of the Guidelines where an EVMS requirement

is applied.

The Guidelines approach provides contractors the flexibility to develop and implement effective

management systems while nonetheless ensuring performance information is provided to management in

a consistent manner.

1.2.3 System Compliance and Acceptance

An EVMS that meets the “letter of the law” (i.e., the Guidelines) while failing to meet the intent of the

Guidelines does not support management's needs.

It is the contractor’s responsibility to develop and apply the specific procedures for complying with the

Guidelines. Current DoD policy (Department of Defense Instruction (DoDI) 5000.02, Table 8, EVM

Requirements) requires that contracts meeting certain thresholds use an EVMS that complies with the

Guidelines standard. DoDI 5000.02 also requires the proposed EVMS to be subject to system acceptance

under certain conditions (see Section 2.2 for information on thresholds for compliance and Section 2.3 for

system acceptance). When the contractor’s system does not meet the intent of the Guidelines, the

contractor must make adjustments necessary to achieve system acceptance.

When the government’s solicitation package specifies compliance with the Guidelines and system

acceptance, an element of the evaluation of proposals is the prospective contractor's proposed EVMS. The

prospective contractor should describe the proposed EVMS in sufficient detail to permit evaluation for

compliance with the Guidelines. Section 2.2, Pre-Contract Activities, includes a discussion of both

government and contractor activities during the period prior to contract award. Refer to the applicable

Defense Federal Acquisition Regulation Supplement (DFARS) clauses for specific EVMS acceptance and

compliance requirements for the contract.

1.2.4 System Documentation

EVMS documentation should be established in accordance with the affected organization’s practices for

documenting systems and communicating policies and procedures. Additional guidance for

companies is contained in Section 4 of the EIA-748. Section 2.2.6.2 of this Guide discusses documentation

guidance for contracts that require EVMS compliance.

Upon award of the contract, the contractor utilizes the EVMS process description and documentation to

plan and control the contract work. As the government relies on the contractor’s system, it should not

impose duplicative planning and control systems. Contractors are encouraged to maintain and improve

the essential elements and disciplines of the systems and should coordinate system changes with the

government. The Administrative Contracting Officer (ACO) approves system changes in advance for

contracts that meet the threshold for the Guidelines compliance and system acceptance. Refer to DFARS

Subpart 234.2 Earned Value Management System and Paragraph 2.2.6.2.1 of this Guide for more

information on this requirement.

The government PM and EVM analysts are encouraged to obtain copies of the contractor’s System

Description and related documentation and to become familiar with the company’s EVMS. Companies

usually provide training on their systems upon request, enabling the government team to understand how

company processes generate EVMS data, the impacts of EV measurement methodology, and the


requirements for government approval of changes. Government EVMS specialists should have the latest

System Description and related documentation and familiarize themselves with the company’s EVMS

before beginning surveillance activities.

1.2.5 Cost Impacts

The cost of implementing EVMS is considered part of normal management costs. However, improper

implementation and maintenance create an unnecessary financial burden on both the contractor and the

government. Contractors are encouraged to establish and maintain innovative and cost effective processes

with continuous improvement efforts. Typical areas where costs could be mitigated include selection of

the proper levels for management and reporting, the requirements for variance analysis, and the

implementation of effective surveillance activities (see Part 2 of this guide for information on applying

data items and constructing an effective surveillance plan).

The government and contractor should discuss differences arising from divergent needs (such as the level

of reporting detail) during contract negotiations. While the Guidelines are not subject to negotiation, many

problems concerning timing of EVMS implementation and related reporting requirements are avoided or

minimized through negotiation. The contractor often uses the Work Breakdown Structure (WBS) and

contract data requirements defined in the Request for Proposal (RFP) to establish its planning, scheduling,

budgeting, and management infrastructure, including the establishment of Control Accounts (CAs), Work

Packages (WPs), and charge numbers. The Government should seriously consider the WBS and reporting

levels prior to RFP and during negotiations with the contractor. Decisions made prior to RFP have direct

impact on the resources employed by the contractor in the implementation of the EVMS and data available

to the government through the Integrated Program Management Report (IPMR). The government and

contractor should also periodically review processes and data reporting to ensure that the tailored EVMS

approach continues to provide the appropriate level of performance information to management.

1.2.6 Conclusion

Application of the EVMS Guidelines helps to ensure that contractors have adequate management systems

that integrate cost, schedule, and technical performance. This also provides better overall planning,

control, and disciplined management of government contracts. An EVMS compliant with the Guidelines

and properly used helps to ensure that valid cost, schedule, and technical performance information is

generated, providing the PM with an effective decision making tool.

PART 2: PROCEDURES FOR GOVERNMENT USE OF EARNED VALUE

SECTION 2.1: APPLYING EARNED VALUE MANAGEMENT

2.1.1 Overview

The intent of this guide is to improve the consistency of EVM application across DoD and within industry.

When PMs use EVM in its proper context as a tool to integrate and manage program performance, the

underlying EVMS and processes become self-regulating and self-correcting. PMs should lead this effort,

as the success of the program depends heavily on the degree to which the PM embraces EVM and utilizes

it on a daily basis.

Government PMs recognize the importance of assigning responsibility for integrated performance to the

Integrated Product Teams (IPTs). While PMs and IPTs are ultimately responsible for managing program

performance, EV analysts should assist them in preparing, coordinating, and integrating analysis.


Cooperation, teamwork, and leadership by the PM are paramount for successful implementation and

utilization. There are different support organizations that assist the program team with tailoring and

implementing effective EVM on a program. This section of the guide defines the roles and responsibilities

of the various organizations, offices, and agencies within the DoD.

2.1.2 Government EVM Organizations

Many organizations depend on contractor-prepared and submitted EV information. It is important to

acknowledge, recognize, and balance the needs of each organization. Those organizations include but are

not limited to Acquisition Analytics and Policy (AAP), Defense Contract Management Agency (DCMA),

Component EVM focal points, Systems Command EVM organizations, Service Acquisition

organizations, procuring activities, Contract Management Offices (CMOs), and program offices.

2.1.3 Roles and Responsibilities

2.1.3.1 Acquisition Analytics and Policy (AAP)

AAP is accountable for EVM policy, oversight, competency, and governance across the DoD. One of

AAP’s goals is to increase EV’s constructive attributes for the DoD firms managing acquisition programs

by reducing the economic burden of inefficient implementation of EVM. AAP is dedicated to the idea that

EVM is an essential integrated program management tool and not merely a contractually required report.

AAP has formal cognizance over the EVMSIG, which is the basis for DoD’s assessment of contractor

EVMS compliance to the Guidelines.

2.1.3.1.1 Role of AAP in the Appeal Process

The AAP EVM Interpretation and Issue Resolution (IIR) process provides both industry and government

a vehicle for formally submitting requests to AAP regarding existing DoD EVM policy and guidance. The

process is available when the requestor’s natural chain of command cannot resolve a particular

question or concern. Generally, the requestor should consult with their Service/Agency EVM focal point

prior to initiating an IIR with AAP. Where appropriate, in order to promote a common understanding and

consistent implementation of DoD EVM policy throughout the EVM community, IIR responses are

available to the public via lessons learned on the interpretation of DoD EVM policy and guidance. Any

information, guidance, or recommended resolutions provided by AAP EVM through the IIR process do

not replace any contractual documents, requirements, or direction from the Contracting Officer (CO) on

a given contract.

2.1.3.2 Defense Contract Management Agency

The DCMA is responsible for ensuring the integrity and effective application of contractors’ EVMS. The

DCMA has the responsibility to determine EVMS compliance (see paragraph 2.4.3.4.1) within the DoD.

To this end, the DCMA works with various government and industry teams to develop practical EVMS

guidance, administer contractual activities, and conduct Compliance Reviews (CRs), ensuring initial and

ongoing compliance with the Guidelines.

2.1.3.3 Component EVM Focal Points

Component focal points coordinate and exchange information on EVM. Component focal points

disseminate current policy, provide advice, ensure effective EVM implementation on new contracts,

analyze contractor performance, facilitate Integrated Baseline Reviews (IBRs), assess risk, and support

surveillance activities to assess the EVMS management processes and the reports the system produces.


The Departments of the Air Force, Army, and Navy and the Missile Defense Agency (MDA) all have

component EVM focal points.

2.1.3.3.1 Air Force EVM

Acquisition Integration (SAF/AQXE) is the Air Force focal point for EVM implementation and policy.

Additionally, the Air Force has multiple operating location focal points that provide direct support to

programs at their respective location and/or center. The SAF/AQXE SharePoint site provides up-to-date

information, points of contact, and Air Force policy and guidance.

2.1.3.3.2 Army EVM

Army Acquisition Reporting and Assessments (ARA) under the Deputy for Acquisition and Systems

Management (DASM) is the focal point for EVM implementation within the Army Acquisition

community.

2.1.3.3.3 Navy EVM

The Deputy Assistant Secretary of the Navy (Management and Budget) (DASN (M&B)) is the focal point

for EVM implementation within the Department of Navy. The Naval Center for Earned Value

Management implements EVM and other practices more effectively and consistently across all

Department of Navy acquisition programs, functioning as the Department of Navy’s central point of

contact and authority for all matters concerning the implementation of EVM.

2.1.3.3.4 Missile Defense Agency (MDA) EVM

The MDA Director for Operations is the designated MDA EVM focal point, acting as the principal advisor

to the MDA Director on all matters relating to implementation and use of EVM. The MDA/EV Director

performs the MDA EVM focal point function. The EV Director, as functional lead for MDA EVM,

provides EVM personnel, support, guidance, and assistance to MDA PMs and their staffs in executing

their EVM responsibilities. The MDA EV Director furnishes senior MDA management with timely and

accurate EVM information upon which to make informed decisions. The MDA EV Director coordinates

with DoD, other Government Agencies, and industry in continuous EVM process improvement. The MDA EV Director also:

• Interprets and promulgates EVM policy from the DoD and higher authority and produces MDA

directives and instructions for use by program offices to properly conform with EVM and IBR

policies

• Develops MDA EVM tools and EVM training materials and presents them to the MDA work force.

Responds to MDA management needs in the analysis, formatting, and display of EVM data.

• Through coordination with MDA COs, PMs, and Business/Financial Managers, ensures

incorporation of proper EVM requirements on solicitations and contracts

2.1.3.4 Procuring Activities

The organization tasked with executing the procurement is responsible for implementing EVM on a

contract. These organizations are generally referred to as Procuring Activities. For purposes of this guide,

Procuring Activities are composed of the Program Management Office (PMO), the contracting

organization, and the IPTs that support the PMO. The PMO and the PM help ensure that all solicitations

and contracts contain the correct EVMS and/or Integrated Master Schedule (IMS) requirements, tailored

as appropriate for the specific nature of the program in accordance with DoD policy. The PMO and PM

also have the responsibility to conduct the IBR, perform integrated performance analysis, proactively

manage the program utilizing performance data, and accurately report performance to decision makers.


2.1.3.5 Contract Management Offices

CMOs are assigned to administer contractual activities at specific contractor facilities or regional areas in

support of the PMO. Cognizant CMOs are a part of the DCMA and Navy Supervisor of Shipbuilding,

Conversion and Repair (SUPSHIP), and CMOs may designate EVMS specialists. Additional guidance

regarding CMO functions is provided in paragraph 2.4.3.4 of this Guide, DFARS Subpart 242, DCMA

EVMS Compliance Review and Standard Surveillance Instructions, and Naval Sea Systems Command

(NAVSEA) Standard Surveillance Operating Procedure. The ACO is authorized to approve a contractor’s

EVMS, which recognizes the contractor’s EVMS is acceptable and has been determined to be in

compliance with the Guidelines. The ACO is also authorized to withdraw this approval after certain

procedures have been followed, as specified in section 2.4.5 of this Guide.

SECTION 2.2: PRE-CONTRACT ACTIVITIES

2.2.1 Overview

This section provides EVM policy and general guidance for pre-contract activities, including preparation

of the solicitation and contract, conduct of source selection activities, and tailoring of reporting

requirements. The information provided in this section supports the policy contained in DoDI 5000.02,

IPMR DID, DFARS, and MIL-STD-881. It also supports the guidance contained in the Defense

Acquisition Guidebook and the IPMR Implementation Guide.

2.2.2 Department of Defense Requirements

2.2.2.1 Policy

DoD policy mandates EVM for major acquisition contracts that meet the thresholds and criteria contained

in DFARS and DoDI 5000.02, Enclosure 1, Table 8, EVM Requirements (the thresholds are described

below in Paragraph 2.2.2.2 and Figure 1). The term “contracts” includes contracts, subcontracts,

intra-government work agreements, and other agreements. EVM is mandatory unless waived by the Component

Acquisition Executive (CAE) or designee. This policy also applies to highly sensitive classified programs,

major construction programs, automated information systems, and foreign military sales. In addition, it

applies to contracts where the following circumstances exist: (1) the prime contractor or one or more

subcontractors are a non-US source, (2) contract work is to be performed in government facilities, or (3)

the contract is awarded to a specialized organization such as the Defense Advanced Research Projects

Agency.

2.2.2.2 EVMS Compliance and Reporting Thresholds

Thresholds are in then-year or escalated dollars. When determining the contract value for the purpose of

applying the thresholds, use the total contract value, including planned options placed on contract at the

time of award. The term “contracts and agreements” in the following paragraphs refers to contracts,

subcontracts, intra-government work agreements, and other agreements. For Indefinite Delivery/Indefinite

Quantity (IDIQ) contracts, EVM is applied to the individual task orders or group of related task orders in

accordance with the requirements in Table 8 of Enclosure 1, DoDI 5000.02.

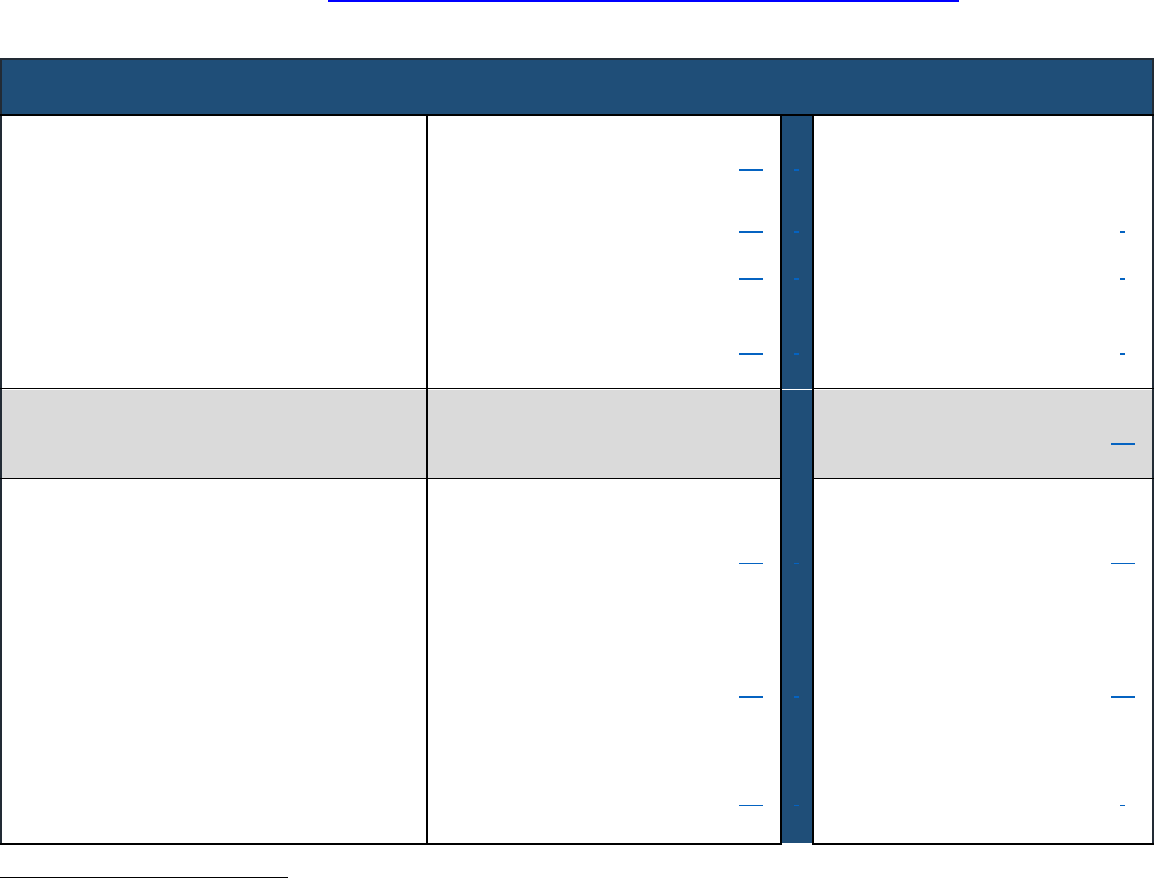

As prescribed in DoDI 5000.02 and DFARS, compliance with the Guidelines is required for DoD cost or

incentive contracts and agreements valued at or greater than $20M. Compliance with the Guidelines and

an EVMS that has been determined to be acceptable by the Cognizant Federal Agency (CFA) are required

for DoD cost reimbursement or incentive contracts and agreements valued at or greater than $100M. If

the contract value is less than $100M, then formal compliance determination of the contractor’s EVMS is

not required; however, the contractor needs to maintain compliance with the standard. Contract reporting

requirements are included in Table 9 of the DoDI 5000.02 shown below in Figure 1.
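The two dollar thresholds described above can be sketched as a simple lookup. This is a minimal illustration only, assuming a cost or incentive contract and a total value expressed in then-year millions of dollars; the names `evms_requirement` and `EvmsRequirement` are hypothetical and do not correspond to any DoD tool.

```python
from dataclasses import dataclass

COMPLIANCE_THRESHOLD_M = 20.0   # at or above $20M: compliance with the Guidelines
ACCEPTANCE_THRESHOLD_M = 100.0  # at or above $100M: CFA-accepted EVMS as well


@dataclass(frozen=True)
class EvmsRequirement:
    guideline_compliance: bool  # contractor must comply with the Guidelines
    cfa_accepted_system: bool   # EVMS must be determined acceptable by the CFA


def evms_requirement(total_value_m: float, cost_or_incentive: bool = True) -> EvmsRequirement:
    """Map total contract value (then-year $M, including planned options) to requirements."""
    if not cost_or_incentive:
        # Other contract types (for example FFP) are outside this sketch.
        return EvmsRequirement(False, False)
    return EvmsRequirement(
        guideline_compliance=total_value_m >= COMPLIANCE_THRESHOLD_M,
        cfa_accepted_system=total_value_m >= ACCEPTANCE_THRESHOLD_M,
    )
```

For example, a $60M cost-type contract would return compliance required but no formal acceptance requirement. The sketch deliberately ignores work scope, duration, and waivers, which the text treats as separate considerations.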

EVM should be a cost-effective system that shares program situational awareness between government

and contractor. In an oversight role, a critical function of the government program office is to use all data,

including cost, schedule, and technical performance metrics, to identify early indicators of problems so

that adjustments can be made to influence future program performance. The decision to apply EVM and

the related EVM reporting requirements should be based on work scope, complexity, and risk, along with

the threshold requirements in the DFARS. Misapplication of EVM can unnecessarily increase costs for

the program.

If the government program office does not believe the full application of EVM would be beneficial, it

should contact its applicable Service/Agency EVM focal point to discuss options so that the program will

still receive the necessary and desired insight into program status. If it is agreed that the full application

of EVM is not necessary, the program office should then request a waiver and/or deviation as required by

their Component policies.

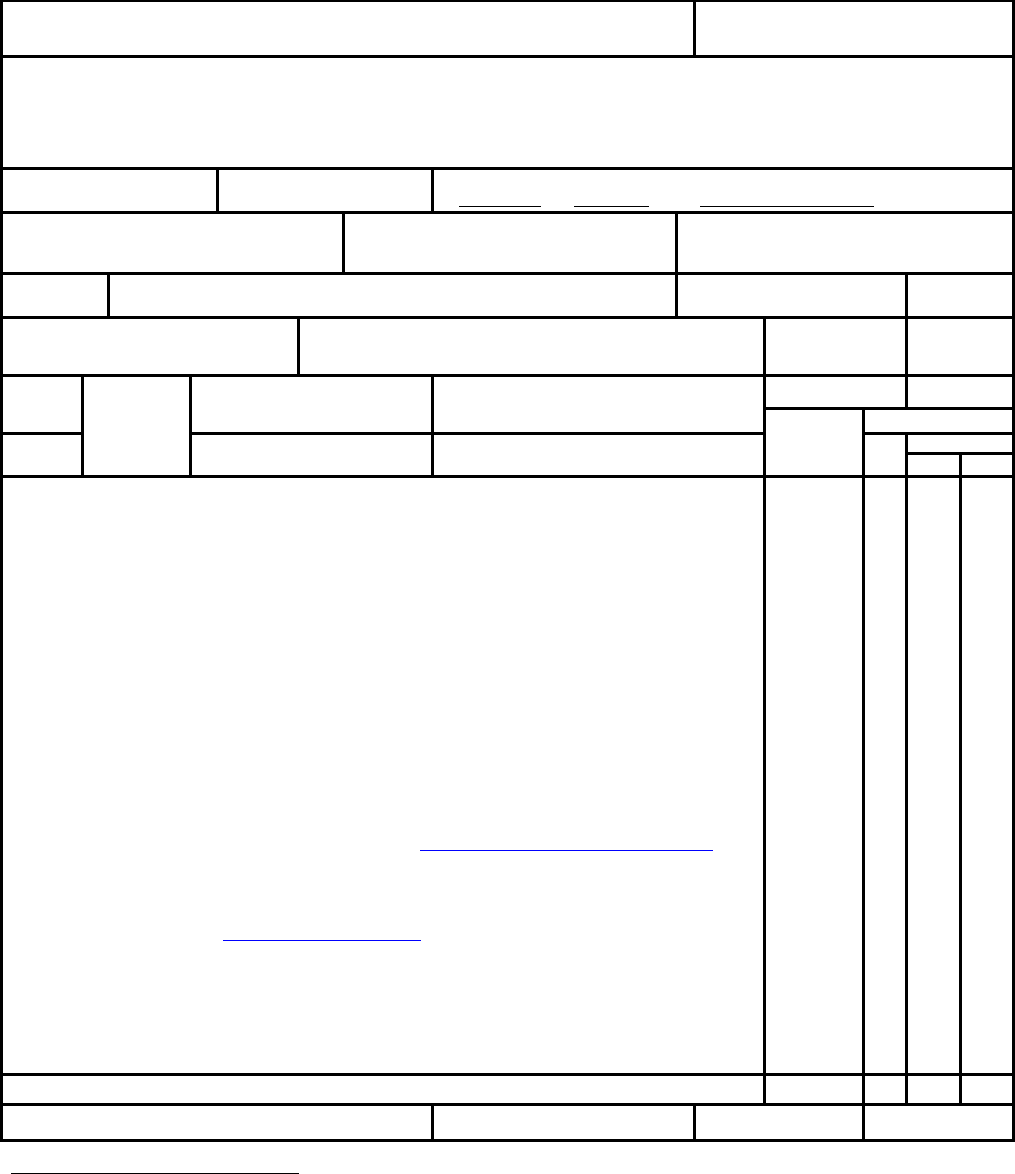

| Contract Value | Applicability | Notes | Source |
|---|---|---|---|
| < $20M | Not required | IPMR should be used if cost and/or schedule reporting is requested by the PMO | IPMR DID DI-MGMT-81861A |
| ≥ $20M & < $50M | Required monthly when EVM requirement is on contract | Formats 2, 3, and 4 may be excluded from the Contract Data Requirements List (CDRL) at Program Manager discretion based on risk | IPMR DID DI-MGMT-81861A |
| ≥ $50M | Required monthly when EVM requirement is on contract | All Formats must be included in the CDRL | IPMR DID DI-MGMT-81861A |

Additional Information

For ACAT I contracts, task orders, and delivery orders, IPMR data will be delivered to the EVM Central Repository.

The IPMR can be tailored to collect cost and/or schedule data for any contract regardless of whether EVM is required. For information on tailoring the IPMR, refer to the DoD IPMR Implementation Guide.

Formats and reporting requirements for the IPMR are determined and managed by USD(A&S) through the office of AAP.

Reporting thresholds are in then-year dollars.

DI-MGMT-81861A = Data Item Management-81861

FIGURE 1: EVM REPORTING REQUIREMENTS
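The rows of Figure 1 can be read as a lookup keyed on contract value. The following is a minimal sketch under that reading, assuming values in then-year millions of dollars; the function name `ipmr_reporting` and the dictionary keys are hypothetical.

```python
def ipmr_reporting(total_value_m: float) -> dict:
    """Look up the Figure 1 row for a contract value given in then-year $M."""
    if total_value_m < 20:
        # IPMR is not required, but should be used if the PMO requests
        # cost and/or schedule reporting.
        return {"ipmr": "not required", "formats_pm_may_exclude": ()}
    if total_value_m < 50:
        # Required monthly when the EVM requirement is on contract;
        # Formats 2, 3, and 4 may be excluded at PM discretion based on risk.
        return {"ipmr": "monthly", "formats_pm_may_exclude": (2, 3, 4)}
    # At or above $50M all Formats must be included in the CDRL.
    return {"ipmr": "monthly", "formats_pm_may_exclude": ()}
```

Returning the formats that may be excluded, rather than those that must be delivered, keeps the tailoring decision with the PM, as the figure intends.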

2.2.2.3 EVMS Options

2.2.2.3.1 Contracts Less than $20M

The application of EVM is not required on cost or incentive contracts or agreements valued at less than

$20M. The decision to implement EVM on these contracts and agreements is a risk-based decision, at the

discretion of the PM, based on a cost-benefit analysis that compares the program risks versus the cost of

EVM implementation. The purpose of the cost-benefit analysis is to substantiate that the benefits to the

government outweigh the associated costs. It does not require approval above the PM; however, it may be

included in the program Acquisition Strategy (AS) if desired. Factors to consider when making a risk-

based decision to apply EVM on cost or incentive contracts or agreements valued at less than $20M

follow:

• The total contract value including planned options. If the value of a contract is expected to grow

to reach or exceed $20M, the PM should consider applying an EVM requirement on the contract.

• EV implementation. Evaluate the existence and utilization of the contractor’s EVMS as a part of

its routine business practices when considering implementation.

• Type of work and level of reporting available. Developmental or integration work is inherently

risky to the government, and reporting should reflect how programs are managing that risk.

• Schedule criticality of the contracted effort to a program’s mission. Items required to support

another program or schedule event may warrant EVM requirements.

2.2.2.3.2 Contracts Less than 18 Months in Duration

For contracts or agreements of less than 18 months in duration, including options, the cost and time

needed for EVM implementation may outweigh any benefits received. An

approved DFARS deviation is not required for contracts or agreements of less than 18 months.

2.2.2.3.3 Non-Schedule-Based Contracts

Consider the application of EVM to contracts that may be categorized as non-schedule-based (i.e., those

that do not ordinarily contain work efforts that are discrete in nature) on a case-by-case basis. Non-

schedule-based contracts include the following:

• Those compensated on the basis of Time and Materials (T&M)

• Services contracts

• Any contracts composed primarily of Level of Effort (LOE) activity, such as program management

support contracts

Non-schedule-based contracts might not permit objective work measurement due to the nature of the work,

most of which cannot be divided into segments that produce tangible and measurable product(s). The

nature of the work associated with the contract is the key factor in determining whether there will be any

appreciable value in obtaining EVM information. Paragraph 2.2.2.8 describes considerations when

determining applicability of work scope.

2.2.2.3.4 Intra-Government Work Agreements

The DoDI 5000.02 requires application of EVM on Intra-Government Work Agreements that meet the

same thresholds as other contracts. While accounting systems used by the government may not have

sufficient controls to comply with the Guidelines, they do not prevent generation of IPMR data.

Government Enterprise Resource Planning (ERP) systems and good scheduling practices enable the

agency to provide reliable performance management data. Recommended reports to place on Intra-

Government Work Agreements include IPMR cost and schedule performance data, staffing data, and

variance analysis; Quarterly Schedule Risk Assessment (SRA); Quarterly Contract Funds Status Report

(CFSR); and a Cost and Software Data Report (CSDR) as required.

It is appropriate to not apply the EVM requirement in cases where the nature of the work would not lend

itself to meaningful EVM information. Exemptions from the EVM policy should be the exception, not the

rule, as they are necessary only in cases where a cost or incentive contract is being used for non-schedule-

based work. This type of work is typically accomplished using a Firm Fixed Price (FFP) contract. Program

offices should follow the process to obtain an EVM applicability decision.

The DoDI 5000.02 requires that the appropriate authority dependent upon ACAT level (i.e., AAP,

Component EVM focal points, CAE or designee) review and determine EVM applicability. If EVM is

determined not to apply based on the nature of the work, then EVM is not placed on contract. If EVM is

determined to apply, then EVM is placed on contract in accordance with established thresholds unless a

waiver is obtained. The Services/Agencies have the ability to delegate waiver or deviation authority from

the Federal Acquisition Regulation (FAR) or DFARS. PMs and COs should address waivers and

deviations to their applicable Service/Agency focal point for guidance, documentation requirements, and

processes.

2.2.2.3.5 EVM in Production

EVM methodology and system requirements are applicable to Low-Rate Initial Production (LRIP)

contracts with remaining development or production risk unless the scope of work and risks do not lend

themselves to the application. A tailored IPMR Format 1, 5, 6, and 7 may be used for reporting; Format 1

should address the entire program and include detail for high-risk WBS items.

Application of EVM methodology and system requirements to Full-Rate Production (FRP) contracts is

based on risk and the contractual scope of work. FRP risks to the government are generally low;

consequently, EVM deviations are often requested. If EVM is not applied, program management principles as

well as cost and schedule reporting generally apply. The reporting should include cost information (such

as actuals) and top-level schedule information providing delivery dates of end products. Historical data

integrity issues or performance risks may drive additional reporting requirements and/or the application

of EVM.

The EVMS Guidelines provide the basis for determining whether contractors’ management control

systems are acceptable. As management control systems for development and production contracts tend

to differ significantly, it is impossible to provide detailed implementation guidance that specifically

applies to all cases for every contractor. Therefore, users of the guidelines should be alert for areas in

which distinctions in detailed interpretation seem appropriate or reasonable, whether or not they are

specifically identified. Interpretation of the guidelines must be practical as well as sensitive to the overall

requirements for performance measurement. By applying the guidelines instead of specific DoD

prescribed management control systems, contractors have the latitude to meet their unique management

needs. This allows contractors to use existing management control systems or other systems of their

choice, provided they meet the guidelines.

The same EVM reporting requirements in Figure 1 apply to production efforts. However, in more mature

production efforts, the risk associated with the contract is not commensurate with the application of EVM.

Programs are encouraged to consult with EVM focal points to determine if a waiver and/or deviation is

an option and to develop alternative program management and reporting strategies and approaches.

2.2.2.3.6 Manufacturing/Enterprise Resource Planning (M/ERP) System

M/ERP systems integrate planning of all aspects (not just production) of a manufacturing firm. They

include functions such as business planning, production planning and scheduling, capacity requirement

planning, job costing, financial management and forecasting, order processing, shop floor control, time

and attendance, performance measurement, and sales and operations planning. Material Requirements

Planning (MRP) and Manufacturing Resource Planning (MRPII) are predecessors of the

Manufacturing/Enterprise Resource Planning (M/ERP) system. The intent of MRP and MRPII was

centralizing and integrating business information in order to facilitate decision making for production line

managers and to increase the efficiency of the overall production line. MRP is concerned primarily with

manufacturing materials, while MRPII is concerned with the coordination of the entire manufacturing

production line, including materials, finance, and human relations. The goal of MRPII is to provide

consistent data to all members in the manufacturing process as the product moves through the production

line.

Government EVM stakeholders recognize the significance of M/ERP systems in program management of

production contracts requiring EVM implementation and compliance. The National Defense Industrial

Association (NDIA) Integrated Program Management Division’s white paper, “Earned Value

Management in a Production Environment” indicates that an “MRP system is one example of a tool used

in production that potentially drives differences in how an EVMS is used or explained versus

development.” Understanding these differences is paramount to confirming compliance with the

Guidelines. M/ERP systems affect the operation and/or process of almost every EVMS applied on

development contracts. Examples include work authorization processes, the way the IMS is used, how

parts are moved both within and between contracts, how supplier or material cost and performance are

recorded, Control Account Manager (CAM) involvement in baseline development and performance

assessment, and WBS level.

As contractors are ultimately responsible for demonstrating compliance with the Guidelines, it is expected

that their EVM System Description and related documentation include language that identifies and

describes in detail areas where EVM processes differ for development and production contracts. In

addition, contractors should explain how each process complies with the Guidelines. Contractors should

refer to the DoD EVMSIG when describing guideline compliance in the differences section of their EVMS

Description. However, there is no requirement for the differences section when contractors elect to have

separate EVMS descriptions for production and development contracts.

2.2.2.3.7 Alternate Acquisition Methods

The same application rules in Figure 1 apply to alternate acquisition methods. With the Department’s

efforts to streamline the acquisition process in order to deliver capabilities faster, alternate acquisition

instruments and methodologies, including Other Transaction Authorities (OTAs) and Middle Tier

Acquisitions (MTAs), have been encouraged. Even with streamlining, programs must still be managed,

and Earned Value Management (EVM) should be used where applicable along with other management

tools and processes to provide insight and actionable data to support proactive decision-making on

programs. EVM applies when the work scope warrants it, the dollar value meets the thresholds in the

DFARS, and there is risk to the Government. As described in the OSD Middle Tier of Acquisition Interim

Authority and Guidance memo and the DoD Other Transactions Guide for Prototype Projects, OTAs and

MTAs have special considerations, but must still be managed and able to produce information needed for

effective management control of cost, schedule, and technical risk.

2.2.2.4 Contract Growth and Thresholds

Determination of the applicability of EVM is based on the estimated contract value and the expected value

of total Contract Line Item Numbers (CLINs) and options that contain discrete work at the time of award.

For IDIQ contracts, EVM is applied to the individual task orders or groups of related task orders with

discrete work. Most contracts are modified as time progresses; a typical result of these modifications is an

increase in the contract value. In some cases, a contract that was awarded at less than $20M may later

cross the threshold for EVM compliance or a contract awarded for less than $100M may later cross the

threshold for formal system acceptance. Therefore, it is recommended that the increased total contract

value be re-evaluated against the EVM thresholds for a new application of EVM. The PM should evaluate

the total contract value, including planned options and task and delivery orders, and apply the appropriate

EVM requirements based on that total value.
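The re-evaluation described above amounts to checking whether a modified contract value has moved across a threshold it sat below at award. A minimal sketch follows, assuming values in then-year millions of dollars; `newly_crossed_thresholds` is a hypothetical helper name.

```python
from __future__ import annotations

# $20M: EVM compliance; $100M: formal system acceptance
EVM_THRESHOLDS_M = (20.0, 100.0)


def newly_crossed_thresholds(award_value_m: float, current_value_m: float) -> list[float]:
    """Return thresholds ($M) met or exceeded only after contract modification."""
    return [t for t in EVM_THRESHOLDS_M if award_value_m < t <= current_value_m]
```

A contract awarded at $18M and later modified to $24M returns `[20.0]`, signalling that the PM should re-evaluate the EVM requirement. An empty list means no threshold was crossed by the growth.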

2.2.2.5 Exclusions for Firm Fixed Price (FFP) Contract Type

The application of EVM on FFP contracts and agreements is discouraged, regardless of dollar value. Since

cost exposure is minimized in an FFP environment, the government may elect to receive only the IMS in

order to manage schedule risk. If both parties require access to cost/schedule data due to

program risk, the PM should re-examine the contract type to see if an incentive contract is more

appropriate for the risk.

However, in extraordinary cases where cost/schedule visibility is deemed necessary and the contract type

(e.g., FFP) is determined to be correct, the government PM is required to obtain a waiver for individual

contracts from the MDA. In these cases the PM conducts a Business Case Analysis (BCA) that includes

supporting rationale for EVMS application (see Appendix C: Essential Elements of a Business Case

Analysis for guidance). When appropriate, include the BCA in the acquisition approach section of the

program AS report. In cases where the contractor already has an EVMS in place and plans to use it on the

FFP contract as part of its regular management process, negotiate EVM reporting requirements before

applying an EVM requirement. However, government personnel should not attempt to dissuade

contractors that use EVMS on all contracts irrespective of contract type from their use of EV processes to

manage FFP contracts.

Some factors to consider in applying EVM in an FFP environment follow:

• Effort that is developmental in nature involving a high level of integration

• Complexity of the contracted effort (e.g., state-of-the-art research versus Commercial-Off-the-Shelf

procurement of items already built in large numbers)

• Schedule criticality of the contracted effort to the overall mission of the program (e.g., items required

to support another program or schedule event may warrant EVM requirements)

• Minimized cost risk exposure in an FFP environment (i.e., the government may elect to receive only

the IMS in order to manage schedule risk)

• Nature of the effort (e.g., software intensive effort) is inherently risky

• Contractor performance history as demonstrated by prior contracts with IPMR data or documented

in Contractor Performance Assessment Reports

See Paragraph 2.2.5.6.3.4 for guidance on tailoring EVM reporting on FFP contracts.

2.2.2.6 Hybrid Contract Types

Hybrid contracts may require tailored reporting. For example, a contract may be composed of Cost Plus

Incentive Fee (CPIF), FFP, and T&M elements. The following general guidance applies to hybrid contract

types: limit reporting to what can and should be effectively used. In some cases, it is advisable to exempt

portions of the contract from IPMR reporting if the portions do not meet the overall threshold or contract

type criteria. Generally, different contracting types are applied to different CLIN items, and these can then

be segregated within the WBS. When determining the contract value for the purpose of applying the

thresholds, use the total contract value of the portions of the contract that are cost reimbursable or

incentive, including planned options placed on contract at the time of award.

Keep in mind the potential impact to the CFSR, which can be applied to all contract types with the

exception of FFP. It may be advisable to call for separate reporting by contract type in the CFSR. The

following examples illustrate these concepts.

Example 1: The planned contract is a development contract with an expected award value of $200M. At

the time of award, the contract type is entirely Cost Plus Award Fee (CPAF). Subsequent to award, some

additional work is added to the contract on a T&M CLIN.

Solution: Apply full EVM and IPMR reporting at the time of award to the entire contract but exempt the

T&M efforts from IPMR reporting at the time they are added to the contract. However, the T&M efforts

extend over several years, and the PM wishes to have a separate forecast of expenditures and billings. The

CFSR data item is therefore amended to call for separate reports for the CPAF and T&M efforts.

Example 2: The planned contract is a mix of development and production efforts with an anticipated value

of $90M. At the time of award, the development effort is estimated at $10M under a CPAF CLIN, and the

production is priced as FFP for the remaining $80M.

Solution: After conducting a risk assessment, the PM concluded that the risk did not justify EVM and

IPMR reporting on the FFP production effort and that there was not sufficient schedule risk to justify an

IMS. The PM noted that the development effort fell below the mandatory $20M threshold and, based on

a risk evaluation, determined that EVM was not applicable. However, a CFSR is determined to be

appropriate for the development portion of the contract to monitor expenditures and billings. A CFSR

would not be appropriate for production, as it is priced as FFP.

Example 3: A planned contract calls for development and maintenance of software. The overall value of

the development portion is $30M, and the maintenance portion is $170M. Development is placed on a

CPIF CLIN, while maintenance is spread over several Cost Plus Fixed Fee (CPFF) CLINs. It is anticipated

that the majority of the maintenance effort should be LOE. The PM is concerned about proper segregation

of costs between the efforts and has determined that there is significant schedule risk in development. The

PM is also concerned about agreeing up front to exclude the maintenance portion from EVM reporting.

Since there is a specified reliability threshold that is maintained during the operational phase, performance

risk has been designated as moderate. There are key maintenance tasks that can be measured against the

reliability threshold.

Solution: Place EVMS DFARS on the contract and apply IPMR reporting to the development portion at

the time of contract award. Specific thresholds are established at contract award for variance reporting for

the development effort. IPMR reporting is also applied to the maintenance portion of the contract. Format

1 reporting is established at a high level of the WBS, with Format 5 reporting thresholds for maintenance

to be re-evaluated after review of the EVM methodology during the IBR. Variance reporting then

specifically excludes WBS elements that are determined to be LOE. CFSR reporting is also required for

the entire contract with a requirement to prepare separate reports for the development and maintenance

portions, as they are funded from separate appropriations. Format 6 is required for the development effort

but not for the maintenance effort. A CAE waiver is provided to allow for departure from the required 7

Formats.

Example 4: An IDIQ contract is awarded for a total value of $85M. The delivery/task orders include four

delivery/task orders for software development, each under $20M, each with a CPIF or CPFF contract type.

Each delivery/task order’s scope is for a software iteration that culminates in a complete software product.

There is also a material delivery/task order for material purchases of $26M. The estimated contract values

of the delivery/task orders are as follows:

Delivery/Task Order 1: $26M FFP for material purchases (i.e., computers and licenses)

Delivery/Task Order 2: $15M CPIF software development, iteration #1, 12 months

Delivery/Task Order 3: $11M CPIF software development, iteration #2, 12 months

Delivery/Task Order 4: $16M CPFF software development, iteration #3, 12 months

Delivery/Task Order 5: $17M CPFF software development, iteration #4, 12 months

Solution: Each delivery/task order can have different contract types. An IDIQ contract can be awarded to

a single vendor or multiple vendors. Per DoDI 5000.02, for IDIQ contracts, inclusion of EVM

requirements is based on the estimated ceiling of the total IDIQ contract, and then is applied to the

individual task/delivery orders, or group(s) of related task/delivery orders, that meet or are expected to

meet the conditions of contract type, value, duration, and work scope. The EVM requirements should be

placed on the base IDIQ contract and applied to the task/delivery orders, or group(s) of related

task/delivery orders. “Related” refers to dependent efforts that can be measured and scheduled across

task/delivery orders. The summation of the cost reimbursement software development delivery orders is

$59M (i.e., delivery orders 2-5). These are a group of related delivery orders. The EVMS DFARS should

be placed on the base contract and each of the delivery orders within this group. IPMR reporting for all 7

Formats should be applied.
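The arithmetic behind Example 4 can be checked with a short script. This is an illustrative sketch only: it treats the cost-type software development orders as the related group, as the example does, but deciding whether orders are "related" remains a judgment that code cannot make. All variable names are hypothetical.

```python
# (task order number, estimated value in $M, contract type) from Example 4
task_orders = [
    (1, 26, "FFP"),
    (2, 15, "CPIF"),
    (3, 11, "CPIF"),
    (4, 16, "CPFF"),
    (5, 17, "CPFF"),
]
COST_TYPES = {"CPIF", "CPFF"}  # cost reimbursement types used in the example
EVM_THRESHOLD_M = 20

idiq_total_m = sum(value for _, value, _ in task_orders)
related_group = [(num, value) for num, value, ctype in task_orders if ctype in COST_TYPES]
group_total_m = sum(value for _, value in related_group)
group_meets_threshold = group_total_m >= EVM_THRESHOLD_M

print(idiq_total_m, group_total_m, group_meets_threshold)  # 85 59 True
```

Although each software order is individually under $20M, the group total of $59M is what is compared against the threshold, which is why EVM applies to delivery orders 2 through 5.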

Example 5: A planned contract calls for discrete and LOE type CLINs and is CPAF. This effort is

primarily to execute Post Shakedown Availabilities for four ships, which includes support

for tests and trials; a relatively small amount of materials may also be required. Each Post Shakedown

Availability is a discrete effort that lasts for 12-16 weeks and the Independent Government Estimate states

that on average each Post Shakedown Availability will cost about $17.5M (i.e., $8M under completion

type CLINs and $9.5M under LOE type CLINs). Altogether for four ships, the anticipated contract value

is approximately $70M, of which $32M is completion type and $38M is LOE type. The PM intends to

tailor the IPMR in order to get insight into program status.

Solution: Using the calculations provided, there is a total of $32M of completion type CLINs on this

CPAF contract. Using the contract type and dollar thresholds only, the EVMS DFARS would be applied

on the contract since $32M is greater than $20M. However, the scope as described is not the type of scope

that would benefit from adhering to a compliant EVMS. Therefore, an EVM applicability determination

from the cognizant official to not apply EVM should be pursued. The EVM applicability decision should

describe the scope of work and the alternative approach planned to ensure insight into program status. In

this case, the PM has decided to use a tailored IPMR. For the $38M of LOE scope, an applicability

determination from the cognizant official should also be pursued.
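The figures in Example 5 follow from the per-availability estimate multiplied across four ships. A minimal sketch of that arithmetic, with hypothetical variable names and values in millions of dollars:

```python
SHIPS = 4
COMPLETION_PER_PSA_M = 8.0  # completion type CLINs per Post Shakedown Availability
LOE_PER_PSA_M = 9.5         # LOE type CLINs per Post Shakedown Availability
EVM_THRESHOLD_M = 20.0

completion_total_m = SHIPS * COMPLETION_PER_PSA_M     # 32.0
loe_total_m = SHIPS * LOE_PER_PSA_M                   # 38.0
contract_total_m = completion_total_m + loe_total_m   # 70.0

# On dollar value alone the completion type scope exceeds the threshold;
# the example then turns on the nature of the work, not on this test.
completion_exceeds_threshold = completion_total_m >= EVM_THRESHOLD_M
```

The threshold test only establishes that the EVMS DFARS clause would apply by default. The applicability determination discussed in the solution is a separate, scope-based judgment.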

In conclusion, every contract should be carefully examined to determine the proper application of reporting. The

preceding examples were shown to illustrate the various factors to evaluate in order to determine the

appropriate level of reporting. Every contract is different, and the analyst is encouraged to work with the

PM and EV focal points to determine the appropriate requirements.

2.2.2.7 Integrated Master Schedule (IMS) Applicability and Exclusions

The IPMR, Format 6 (IMS) is mandatory in all cases where EVM is mandatory; however, the IMS may

also be required when there is no EVM requirement. To require an IMS without an EVM DFARS requirement,

the PMO may use the IPMR, Format 6 to apply only the IMS. For FFP contracts where there is schedule risk,

the PMO may consider applying Format 6. However, since the IMS is a network-based schedule, an IMS

may not be appropriate for FRP efforts that contain primarily recurring activity and are not suitable for

networking. These contracts are generally planned and managed using production schedules such as Line

of Balance (LOB) or M/ERP schedules, providing sufficient detail to manage the work.

2.2.2.8 EVM Applicability Determination and Exclusion Waivers

Per the DoDI 5000.02, when a contract meets the contract criteria (type, dollar, duration) thresholds for

EVM application, EVM is then applied. A work attributes review can be completed to determine the

applicability of EVM to the work scope. For contracts where USD(A&S) is the MDA/DAE, AAP reviews

and approves EVM applicability in coordination with the appropriate Service/Agency EVM focal point.

For all other Acquisition Category (ACAT) program contracts, the Service/Agency CAE or designee

determines EVM applicability. If AAP, the CAE, or designee determines that EVM does not apply based

on the nature of the work scope, then EVM is not required to be placed on contract (i.e., no DFARS

deviation is required). See Figure 2 below for the decision process for EVM application.

In some cases, the contract may meet the contract criteria thresholds and EVM applicability determination

based on work scope, but the PM still wishes to exclude EVM for other reasons. In those cases, the

appropriate authority must review and approve the exclusion of DFARS clauses and waivers of mandatory

reporting. A situational example is the award of a “Fixed Price Incentive” contract in a mature, production

environment, which establishes an overall price ceiling and gives the contractor some degree of cost

responsibility in the interim before a firm arrangement can be negotiated. The PM evaluates the risk in the

contract effort and requests an EVM waiver through its component EVM focal point for appropriate

authority evaluation to waive EVM. However, if a program has received a determination of non-

applicability, then a DFARS waiver or deviation is not required.
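The sequence of determinations in this paragraph can be summarized as a small decision function. This is a simplified sketch of the logic as described in the text, not a reproduction of the official decision figure; the `Outcome` names and the `evm_decision` function are hypothetical.

```python
from enum import Enum


class Outcome(Enum):
    NOT_REQUIRED = "contract criteria not met; EVM not required"
    NOT_APPLICABLE = "work scope unsuitable; no DFARS waiver or deviation needed"
    WAIVED = "EVM applies but an approved waiver or deviation excludes it"
    APPLY = "EVM placed on contract"


def evm_decision(meets_contract_criteria: bool,
                 scope_suits_evm: bool,
                 waiver_approved: bool = False) -> Outcome:
    """Walk the determinations in order: criteria, work scope, then waiver."""
    if not meets_contract_criteria:      # type, dollar, and duration thresholds
        return Outcome.NOT_REQUIRED
    if not scope_suits_evm:              # applicability determination by cognizant official
        return Outcome.NOT_APPLICABLE
    if waiver_approved:                  # PM request approved by appropriate authority
        return Outcome.WAIVED
    return Outcome.APPLY
```

The ordering matters: a determination of non-applicability ends the process before any waiver is considered, which matches the statement that no DFARS waiver or deviation is required in that case.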

2.2.2.9 Support and Advice

In structuring a procurement to include EVM requirements, those preparing the solicitation package

should seek the advice and guidance of their component EVM focal point.

FIGURE 2: DECISION PROCESS FOR EVM APPLICATION

*NOTE ON FIGURE 2: DECISION PROCESS FOR EVM APPLICATION: The PM has the option

to make a business case to apply EVM outside the thresholds and application decision.

2.2.2.10 Earned Value Management Central Repository (EVM-CR)/Format of IPMR Delivery

The DoD established a single Earned Value Management Central Repository (EVM-CR) as the

authoritative source for EVM data on ACAT I programs. The EVM-CR business rules and processes

control and provide timely access to EVM data for the Office of the Secretary of Defense (OSD), the

Services, and the DoD Components. Accordingly, all DoD contractors for ACAT I programs with EVM

requirements submit their IPMRs and CFSRs to the EVM-CR. The EVM-CR provides capability to

upload, review, approve, and download all EVM reporting documents. To be the authoritative source of

contract EVM data, the data is provided directly by the contractor and reviewed and approved by the

Government PMO. Government EVM analysts reviewing contractor submissions should be

knowledgeable of the EVM-CR and how to set up the reporting streams, which facilitate initial

submissions made by the contractor. Note: The EVM-CR is an unclassified system.

All formats should be submitted electronically in accordance with the DoD-approved schemas as

described in the IPMR DID. The Contract Data Requirements List (CDRL) specifies reporting

requirements.

Any program with EVM reporting requirements regardless of ACAT level can use the EVM-CR to collect

and store EVM reporting data.

2.2.3 General Guidance for Program Managers

2.2.3.1 Work Breakdown Structure (WBS)

Developed by the PM and the PMO staff early in the program planning phase, the Program Work

Breakdown Structure (PWBS) is a key document. The WBS forms the basis for the SOW, SE plans, IMS,

EVMS, and other status reporting (see MIL-STD-881, Work Breakdown Structure Standard, for further

guidance).

2.2.3.2 Program Manager Responsibilities

The PM has the responsibility to follow current DoD policy in applying EVM and IMS requirements to

the proposed contract. The contract SOW and the applicable solicitation/contract clauses define EVMS

requirements (see Paragraphs 2.2.5.2 and 2.2.5.3 for additional guidance).

As previously stated, the CDRL defines EVM reporting requirements IAW DI-MGMT-81861 Integrated

Program Management Report (IPMR). The PM should tailor reporting requirements based on a realistic

assessment of management information needs for effective program control within the requirements

prescribed in DI-MGMT-81861 and the IPMR Implementation Guide. The PM can tailor requirements

to optimize contract visibility while minimizing intrusion into the contractor’s operations. Government

reporting requirements are to be specified separately in the contract using a CDRL (DD Form 1423-1 or

equivalent). The solicitation document and the contract should contain these requirements. The PM is also

engaged in the evaluation of the proposed EVMS during source selection. See Appendix D: Sample Award

Fee Criteria for examples that can be used as a summary checklist of implementation actions.

2.2.4 Acquisition Strategy/Acquisition Plan

The Acquisition Strategy (AS) describes the PM’s plan to achieve program execution and programmatic goals across the entire

program life cycle. A key document in the pre-contract phase, the AS details the process for procuring the

required hardware, software, and/or services.

The Acquisition Plan reflects the specific actions necessary to execute the approach established in the

approved AS and to guide contractual implementation. The procuring activity should explain in the

management section of the Acquisition Plan the reason for selection of contract type and the risk

assessment results leading to plans for managing cost, schedule, and technical performance. Refer to the

FAR, Subpart 7.1.

2.2.5 Preparation of the Solicitation

2.2.5.1 Major Areas

Four major areas of the solicitation package should address EVM requirements: WBS, DFARS Clauses,

SOW, and CDRL. For each of these areas, determine the latest revision of the document to apply to the contract;

each area is described in more detail in the following sections.

WBS: Describes the underlying product-oriented framework for program planning and reporting

DFARS Clauses: Requires the contractor to use a compliant EVMS and may require the contractor to use an approved EVMS

SOW: Describes the work to be done by the contractor, including data items

CDRL: Describes the government’s tailored requirements for each data item

2.2.5.2 Work Breakdown Structure

As discussed previously in Paragraph 2.2.3.1 Work Breakdown Structure (WBS), the PM and PMO staff

should develop the WBS very early in the program planning phase. The PWBS contains all WBS elements

needed to define the entire program, including government activities. The Contract Work Breakdown

Structure (CWBS) is the government-approved WBS for reporting purposes and its discretionary

extension to lower levels by the contractor, in accordance with government direction and the SOW. It

includes all the elements for the products (i.e., hardware, software, data, or services) that are the

responsibility of the contractor. The government should consult with the contractor to ensure the WBS

aligns with how the contractor will actually manage the work. The contractor’s internal WBS

may differ from the cost reporting structure; however, the internal WBS should be mapped to the cost

reporting structure. Additionally, the WBS used for IPMR reporting may differ from the cost reporting

structure.

2.2.5.3 Defense Federal Acquisition Regulation Supplement (DFARS) Clauses

Include the appropriate DFARS provisions and clauses in the solicitation and the resulting contract (see

Figure 3). The same provisions and clauses go in the solicitation or contract regardless of dollar value.

However, the offeror has different response options based on the dollar value of the effort. The figure

shows these options when an RFP has the EVMS provision.

See http://www.acq.osd.mil/dpap/dars/dfars/html/current for the latest version of the clauses.

EVMS Provision and Clause greater than $100M

252.234-7001 (Solicitation): Requires compliance with the Guidelines. Contractor shall assert that it has an approved system or show a plan to achieve system approval.

252.234-7002 (Solicitations, Contract): Contractor shall use the approved system in contract performance or shall use the current system and take necessary actions to meet the milestones in the contractor’s EVMS Plan. Requires IBRs. Approval of system changes and Over Target Baseline (OTB) / Over Target Schedule (OTS). Access to data for surveillance. Applicable to subs.

252.242-7005 (Contract): System disapproval and contract withholds may result if significant deficiencies exist in the EVMS as identified by the ACO.

EVMS Provision and Clause less than $100M

252.234-7001 (Solicitation): Provides a written summary of management procedures or asserts the contractor has an approved system. RFP states that government system approval is not required.

252.234-7002 (Solicitations, Contract): Contractor shall comply with the Guidelines in contract performance, but system approval is not required. Requires IBRs. Approval of OTB/OTS and notification of system changes. Access to data for surveillance. Applicable to subs.

252.242-7005 (Contract): System disapproval and contract withholds may result if significant deficiencies exist in the EVMS as identified by the ACO.

FIGURE 3: DFARS CLAUSES

NOTE: Until there is a final rule on the new DFARS clauses, use the existing clauses.

For contracts valued less than $100M, inclusion of the following paragraph in the SOW is suggested: “In

regards to DFARS 252.234-7001 and 252.234-7002, the contractor is required to have an EVMS that

complies with the EVMS Guidelines; however, the government will not formally accept the contractor’s

management system (no compliance review).”
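
The dollar-value distinction summarized in Figure 3 can be read as a simple decision rule. The following is a minimal illustrative sketch in Python, not an official tool. The names `EVMS_APPROVAL_THRESHOLD`, `EvmsExpectation`, and `evms_expectation` are hypothetical. Treating a contract valued at exactly $100M like the higher tier is an assumption, since the figure only distinguishes "greater than" and "less than" $100M. The current DFARS text remains the governing source.

```python
from dataclasses import dataclass

# Threshold from Figure 3, in U.S. dollars.
EVMS_APPROVAL_THRESHOLD = 100_000_000


@dataclass(frozen=True)
class EvmsExpectation:
    """Hypothetical summary record; field names are illustrative only."""
    system_approval_required: bool
    offeror_response: str   # response option under 252.234-7001
    system_changes: str     # treatment of system changes under 252.234-7002


def evms_expectation(contract_value: float) -> EvmsExpectation:
    """Summarize Figure 3 for a solicitation that carries the EVMS provision.

    Assumption: a value exactly at the threshold is handled like the
    higher tier; Figure 3 does not address that case.
    """
    if contract_value < 0:
        raise ValueError("contract value cannot be negative")
    if contract_value >= EVMS_APPROVAL_THRESHOLD:
        return EvmsExpectation(
            system_approval_required=True,
            offeror_response=("assert an approved system or show a plan "
                              "to achieve system approval"),
            system_changes="approval of system changes",
        )
    return EvmsExpectation(
        system_approval_required=False,
        offeror_response=("provide a written summary of management "
                          "procedures or assert an approved system"),
        system_changes="notification of system changes",
    )


if __name__ == "__main__":
    for value in (250_000_000, 40_000_000):
        print(f"${value:,}: {evms_expectation(value)}")
```

In both tiers the figure lists IBRs, approval of OTB/OTS, access to data for surveillance, and applicability to subcontractors, so the sketch models only the items that differ.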

2.2.5.4 Statement of Work (SOW)

The SOW should contain the following requirements. See Appendix F: Sample SOW Paragraphs for

examples.

• Contractor should develop the CWBS to the level needed for adequate management and control of

the contractual effort.

• Contractor should perform the contract technical effort using a Guidelines-compliant EVMS that

correlates cost and schedule performance with technical progress. The SOW should call for

presentation and discussion of progress and problems in periodic program management reviews.

Cover technical issues in terms of performance goals, exit criteria, schedule progress, risk, and cost

impact.

• The SOW should also contain and describe the requirement for the IBR process. This establishes the

requirement for the initial IBR within 180 days after contract award/Authorization to Proceed (ATP)

and for incremental IBRs as needed throughout the life of the contract for major contract changes

involving replanning or detail planning of the next phase of the program. In the case of Undefinitized

Contract Actions, IBRs should be held incrementally and not delayed until the contract is fully

definitized.

• Major subcontractors should be identified by name or subcontracted effort. If subcontractors are not

known at the time of solicitation, they should be designated for EVM compliance or flow down of

EVMS compliance to subcontractors.

• Integrated program management reporting should require an IPMR, a CFSR, and a CWBS with

dictionary. Data items are called out by parenthetical references at the end of the appropriate SOW

paragraph. Specify whether subcontractor IPMRs are to be included as attachments to the prime

contractor reports.
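
The 180-day window for the initial IBR noted in the list above can be tracked with simple date arithmetic. The following is a minimal sketch in Python. The function names are hypothetical, and counting calendar days (rather than business days) is an assumption, since the guidance above does not say which applies. The caller supplies whichever date governs, contract award or ATP.

```python
from datetime import date, timedelta

# Window for the initial IBR, taken from the SOW guidance above.
IBR_WINDOW_DAYS = 180


def initial_ibr_deadline(award_or_atp: date) -> date:
    """Latest date for the initial IBR, assuming calendar days."""
    return award_or_atp + timedelta(days=IBR_WINDOW_DAYS)


def days_remaining(award_or_atp: date, today: date) -> int:
    """Days left before the deadline; negative once it has passed."""
    return (initial_ibr_deadline(award_or_atp) - today).days


if __name__ == "__main__":
    start = date(2024, 1, 15)  # illustrative award/ATP date
    print(initial_ibr_deadline(start))            # 2024-07-13
    print(days_remaining(start, date(2024, 7, 1)))  # 12
```

Incremental IBRs for major contract changes or Undefinitized Contract Actions are event driven and are not modeled here.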

2.2.5.5 Contract Data Requirements List (CDRL)

While excessive cost and schedule reporting requirements can be a source of increased contract costs,

careful consideration when preparing the CDRL ensures that it identifies the appropriate data needs of the

program and the appropriate DID. In Block 16 of the DD Form 1423-1, pay particular attention to the

items in DI-MGMT-81861 that require the PMO to define tailoring opportunities (e.g., variance

reporting selection and Format 1 reporting). The CDRL provides contractual direction for preparation

and submission of reports, including reporting frequency, distribution, and tailoring instructions. DD Form

1423-1 specifies the data requirements and delivery information.

The level of detail in the EVM reporting, which is placed on contract in a CDRL referencing the IPMR,

should also be based on scope, complexity, and level of risk. The IPMR’s primary value to the government

is its utility in reflecting current contract status and projecting future contract performance. It is used by

the DoD component staff, including PMs, engineers, cost estimators, and financial management personnel

as a basis for communicating performance status with the contractor. In establishing the cost and schedule

reporting requirements, the PM shall limit the reporting to what can and should be effectively used. The

PM shall consider the level of information to be used by key stakeholders beyond the PMO. When

established comprehensively and consistently with CWBS-based reports, EVM data is an invaluable

resource for DoD analysis and understanding. Consider how the PMO is or may be organized to manage

the effort, and tailor the reporting to those needs.

The government should consider the management structure and reporting levels prior to RFP and during

negotiations with the contractor when the government identifies a WBS and contract data requirements.

The contractor often uses the framework defined in the RFP to establish its planning and management

infrastructure, including the establishment of CAs, WPs, and charge numbers. Decisions made prior to

RFP have a direct impact on the resources employed by the contractor in the implementation of the EVMS

and data available to the government.

When finalizing contract documentation, determine the last significant milestone or deliverable early and

include it in the CDRL Block 16. Forward thinking minimizes required contract changes at the end of the

program Period of Performance when it is time to adjust or cease EVM reporting on the contract.

NOTE: The EVM data provided by the contractor can provide a secondary benefit to the cost estimators

during the CSDR planning process. IPMR reporting should be managed by the PMO to include

considerations from the cost, engineering, logistics, and other Government communities in order to ensure

the data will be of use in the future. While the PMO team manages the EVM data process, several other

communities rely on this information to make data-driven predictions of future program costs and

performance characteristics.

2.2.5.5.1 Electronic Data Submission

All formats should be submitted electronically in accordance with DoD-approved schemas posted on the

EVM-CR website. The government may also require native scheduling formats in the CDRL down to the

reporting level as part of the IPMR submissions.

2.2.5.5.2 General Tailoring Guidelines

All parts of DIDs can be tailored as necessary per the tailoring guidance contained in this guide. However,

there are prohibitions against adding requirements beyond the standard DID. Tailoring is accomplished

via DD Form 1423-1, the CDRL form. Any tailoring instructions, such as frequency, depth, or formats required,

are annotated on the CDRL forms.

The program office should have an internal process to review and approve all CDRLs for the contract.

The EVM analysts at each acquisition command should provide assistance in tailoring the IPMR. The