AEROSPACE REPORT NO.

TOR-2009(8591)-11

Design Assurance Guide

4 June 2009

Joseph A. Aguilar

Vehicle Concepts Department

Architecture and Design Subdivision

Prepared for:

Space and Missile Systems Center

Air Force Space Command

483 N. Aviation Blvd.

El Segundo, CA 90245-2808

Contract No. FA8802-09-C-0001

Authorized by: Space Systems Group

Developed in conjunction with Government and Industry contributions as part of the

U.S. Space Programs Mission Assurance Improvement workshop.

APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED

Approved by:

Th6.rman . aas, Principal Director
Office of Mission Assurance and Program Executive
National Systems Group

Robe innichelli, Principal Director
Architecture and Design Subdivision
Systems Engineering Division
Engineering and Technology Group

All trademarks, service marks, and trade names are the property of their respective owners.

Abstract

A large percentage of failures and anomalies that occur in the implementation phase of space programs are attributed to errors or escapes originating in the design process. Across the aerospace industry there are seminal but separate and independent efforts underway to develop approaches to discover, prevent, and correct engineering process errors or escapes earlier in the life cycle, where these problems are less expensive, or even possible, to correct. A sufficient, foundational set of design assurance requirements and processes analogous to those for product quality assurance does not exist for engineering design assurance. In the manner of the quality management system defined in AS9100 (Revision C), it is believed necessary to adapt these process concepts earlier in the design and development process [1].

The cross-discipline, multi-company Design Assurance Topic Team developed a definition of

design assurance, identified key design assurance enterprise attributes and program elements, and

formulated a risk-based design assurance process flow, which can serve as a roadmap for

aerospace programs’ design assurance activities.

Acknowledgements

This document was created by multiple authors throughout the government and the aerospace

industry. For their content contributions, we thank the following contributing authors for making

this collaborative effort possible:

Tina Wang—Boeing Space and Intelligence Systems

Dave Pinkley—Ball Aerospace & Technologies Corp

Chris Kelly—Northrop Grumman Aerospace Systems

Ken Shuey—Lockheed Martin Space Systems Corporation

Bob Torczyner—Lockheed Martin Space Systems Corporation

Daniel Nigg—The Aerospace Corporation

Alan Exley—Raytheon Space and Airborne Systems

A special thank you for co-leading this team and efforts to ensure completeness and quality of

this document goes to:

Ty Smith—Northrop Grumman Aerospace Systems

Joseph Aguilar—The Aerospace Corporation

Contents

1. Introduction
    1.1 Purpose and Objective
    1.2 Definition
    1.3 Further Explanation
    1.4 Why Use Design Assurance
    1.5 How to Use the Guide
    1.6 Design Assurance Topic Team
2. Design Assurance Framework
    2.1 Engineering and Design Related Roles
    2.2 Design Assurance Process Owner
    2.3 Enterprise Attributes Maturity Assessment
    2.4 Design Assurance Implementation/Execution
3. Design Assurance Program Implementation/Execution Process
    3.1 Plan Program
    3.2 Independent Baseline Assessment
    3.3 Plan Activity
    3.4 Execute Activity
    3.5 Monitor/Report Findings
4. Conclusions
Appendix A. Failures and Design Assurance
Appendix B. Acquisition Life Cycle and Design Assurance
Appendix C. Design Assurance Enterprise Attributes/Capability Checklist
Appendix D. Spider/Radar Diagram Examples
Appendix E. Design Assurance Program Elements
Appendix F. Frequently Asked Questions
Appendix G. Useful References
Appendix H. Glossary

Figures

Figure 1. Design assurance framework.
Figure 2. Design assurance process flow.
Figure A-1. Past (1997) on-orbit failure causes.
Figure B-1. Design assurance and the defense acquisition management system [8].
Figure D-1. Spider/Radar diagram, design assurance process enterprise attributes.
Figure D-2. Spider/Radar diagram, design engineering tools and design assurance supplier assessment enterprise attributes.
Figure F-1. National Design Assurance program cost.

Tables

Table 1. Design Assurance Topic Team
Table 2. Design Assurance Plan Program Sub-Process
Table 3. Design Assurance Independent Baseline Assessment Sub-Process
Table 4. Design Assurance Plan Activity Sub-Process
Table 5. Actions for Low, Medium, and High Risks
Table 6. Actions for Low, Medium, and High Risks
Table 7. Design Assurance Execute Activity Sub-Process
Table 8. Design Assurance Monitor/Report Findings Sub-Process
Table C-1. Generic Maturity Level Descriptions
Table E-1. Program Elements

During the past several years, National Security Space (NSS) assets have been subject to an

unacceptable increase in the number of preventable on-orbit anomalies. The reversal of this

trend and the reestablishment of acceptably high levels of mission success have been

identified as the highest priority for the NSS acquisition community… Recent authoritative

studies such as the Tom Young Report have stated unequivocally that in order to achieve

mission success it is necessary to re-invigorate and apply with renewed rigor, i.e., in a formal

and disciplined manner, the principles and practices of mission assurance in all phases of

NSS space programs. – Aerospace Mission Assurance Guide[2]

1. Introduction

This Design Assurance Guide (DAG)* describes a process for performing design assurance (DA) activities and processes independent of the constraints of any specific organizational structure.

The Guide is intended for use by any organization involved in the acquisition of a space system. The

DA process is applied at the enterprise and program levels using enterprise and program resources.

The information in the appendices supports the DAG and is referred to in the Guide. A listing of the

material contained in the supporting appendices is as follows:

Appendix A: Failures and Design Assurance

Appendix B: Acquisition Life Cycle and Design Assurance

Appendix C: Design Assurance Enterprise Attributes/Capability Checklist

Appendix D: Spider/Radar Diagram Examples

Appendix E: Design Assurance Program Elements

Appendix F: Frequently Asked Questions

Appendix G: Useful References

Appendix H: Glossary

The intent is not to create a new DA process that must be integrated into existing processes. Rather, the Guide describes a process that makes use of existing processes already in use in the industry.

1.1 Purpose and Objective

The purpose of the DAG is to reduce or eliminate escapes and omissions linked to design and to ensure design integrity and robustness while maintaining efficiency. Recent Aerospace studies strongly suggest that design issues are the dominant root cause in 40% of recent on-orbit failures [3]. Additionally, design issues have significantly increased over the last 10 years [3]. See Appendix A, Failures and Design Assurance, for additional information.

* Design Assurance Guide and Guide are interchangeable terms.

The objective of the DAG is to provide a DA process that uncovers undiscovered or unidentified

design risks so these design risks can be prevented or the cause corrected as early in the design

cycle as possible. See Appendix B, Acquisition Life Cycle and Design Assurance, for additional

information. The DAG presents a two-level approach to DA: One, an assessment of the enterprise-

level infrastructure supporting design, and two, an independent assessment of the program

design activity.

1.2 Definition

Design assurance is a formal, systematic process that augments the design effort and increases the

probability of product design conformance to requirements and mission needs. The activity associated

with design assurance has, as its objective, a truly independent assessment of the overall process for

development of engineering drawings/models/analyses and specifications necessary to physically and

to functionally describe the intended product, as well as all engineering documentation required to

support the procurement, manufacture, test, delivery, use, and maintenance of the product.

1.3 Further Explanation

DA is a mission assurance function applied to design activities throughout the program life cycle,

similar to product assurance or quality assurance activities which more typically apply to the

manufacturing, integration, test, and logistics phases of a program life cycle. DA takes into account

the user’s mission needs, which are translated into requirements, standards, and design

documentation. Design engineering performs the initial review of requirements and lays out the

building blocks of the design and should consider areas such as reliability, maintainability,

producibility, testability, etc. Systems engineering performs additional elements of design (e.g.,

interface controls, requirements allocation and flow-down, systems analyses, etc.). Product

engineering verifies that the final product is produced and tested using the appropriate practices and

processes.

In order to be unbiased, DA activities need to be performed by experts that are largely independent of

the day-to-day design and systems engineering efforts to increase the likelihood that the design meets

or exceeds customer expectations in function and performance. Having experts that are truly

independent (having no organizational affiliation or program involvement) of the program may not be

possible. What is important is that they provide unbiased and uncompromised assessments free from

any conflicts of interest with the program, for example through an independent reporting path. Subject matter

experts supporting the independent DA assessment may come from the systems engineering

organization or other disciplines associated with the design.

1.4 Why Use Design Assurance

DA comprises independent assessments used to identify possible design escapes as early as

possible in the design life cycle and is a tool for determining the adequacy and efficacy of engineering

design processes and products. DA increases the likelihood that the design meets or exceeds customer

expectations in function and performance. While DA incorporates an independent element into the

program, DA is meant to provide the program additional assistance and not be a hindrance.

The reach back and experiences of the DA team are greater than those available to any single

program. The DA team will work with the program to make use of those resources to ensure program

success.

1.5 How to Use the Guide

The DA process has been developed so it is general enough to work within the unique environment

and culture of a given contractor, federally-funded research and development center, or government

agency.

Most organizations are likely already using many facets of DA that are the subject of this Guide. This

Guide is a framework that describes the DA process and DA tools and outlines what is considered to

be the must-dos for DA activities.

1.6 Design Assurance Topic Team

The DA Topic Team (Topic Team), listed in Table 1, studied the current best practices and literature

on DA and developed the DA process in the Guide.

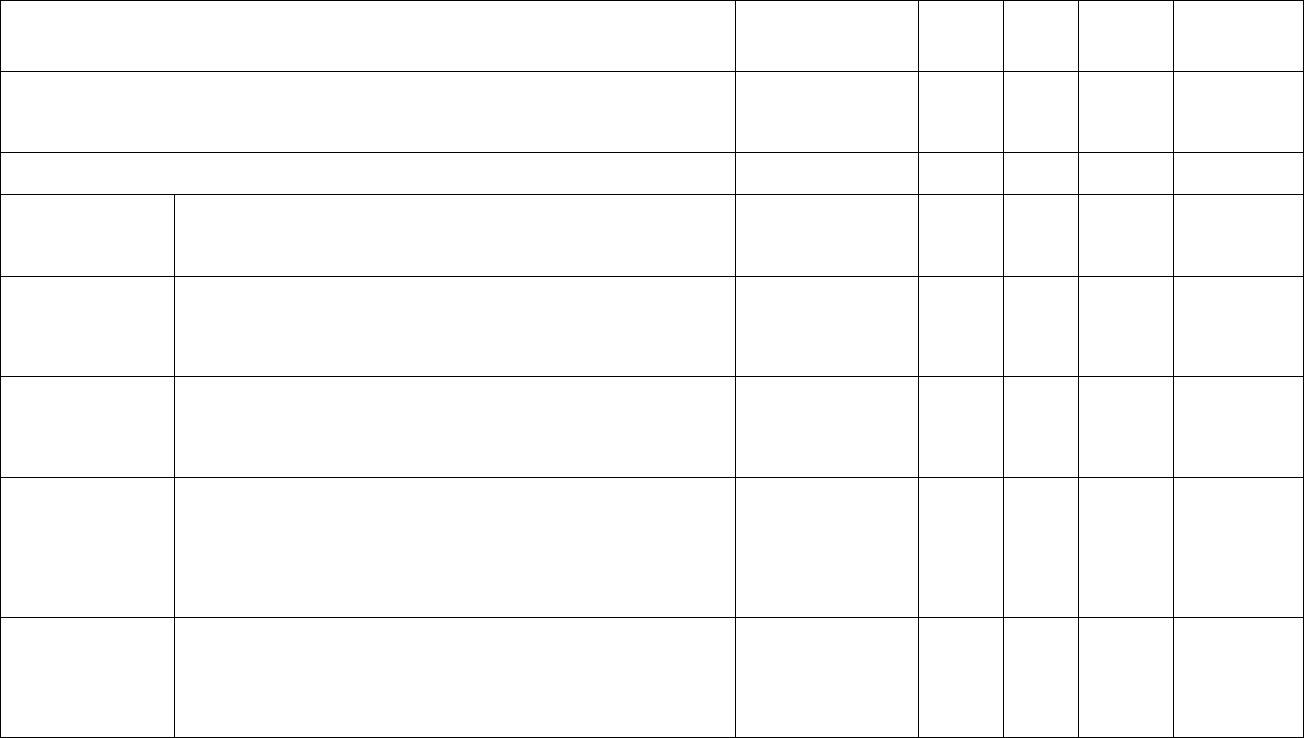

Table 1. Design Assurance Topic Team

Company    Role      Name            Phone         E-mail
NGAS       Chair     Ty Smith        310-813-1696  ty.sm[email protected]
NGAS       Member    Chris Kelly     310-813-8655  chris.kell[email protected]
Aerospace  Co-chair  Joseph Aguilar  310-336-2179  joseph.a.aguil[email protected]g
Aerospace  Member    Daniel Nigg     310-336-2205  daniel.a.nigg@aero.org
Boeing     Member    Tina Wang       310-364-5360  christine.l.wang@boeing.com
LMCO       Member    Ken Shuey       408-743-2487  ken.shuey@lmco.com
LMCO       Member    Bob Torczyner   408-756-7844  bob.torczyner@lmco.com
Raytheon   Member    Alan Exley      310-647-4016  alan_d_exley@raytheon.com

NGAS = Northrop Grumman Aerospace Systems; LMCO = Lockheed Martin Corporation.

2. Design Assurance Framework

The DA framework, Figure 1, shows how DA integrates into a representative enterprise

functions/program environment infrastructure.

Figure 1. Design assurance framework.

The DA framework combines the core responsibility and accountability of the engineering and design

organizations for people, processes, and tools with the program responsibility and accountability for

execution to meet customer design requirements. The DA team must have the technical expertise

appropriate to review design-related processes and products. The key point of Figure 1 is that the DA

process is independent from engineering design organizations and programs, both of whom are

responsible for providing DA enterprise attributes. These enterprise attributes enable execution of the

DA process on each program. It is acknowledged that customers’ needs influence both the enterprise

and program process and activities.

2.1 Engineering and Design Related Roles

The engineering (e.g., systems engineering) and design-related roles include the enabling functions

with respect to people, processes, and tools to support the DA process. These include:

• Create and maintain the command media that defines the design process
• Identify and maintain the design and analysis tools
• Provide trained and knowledgeable design process performers
• Provide subject matter experts to support the DA assessments
• Incorporate lessons learned to continuously improve design guidance documents and training
• Ensure functional discipline to core processes
• Develop best practices and leverage best practices from other organizations

2.2 Design Assurance Process Owner

Mission assurance (e.g., quality, mission excellence, mission success, reliability, etc.) is the DA

Process Owner. The DA Process Owner should be a technical organization that is independent of the

design organization. The mission assurance organization ensures the DA process, described in

Section 3, is implemented. A DA technical team will be assembled that will have the responsibility

for carrying out the DA activities. The DA Process Owner responsibilities include:

• Assembling the DA team and providing the personnel to chair or conduct the independent DA assessments. The DA team shall be a cross-functional team composed of subject matter experts representing the applicable technical disciplines (subject matter experts may come from organizations other than the DA/mission assurance organization).
• Defining the DA process and creating/maintaining the DA command media.
• Assuring that DA processes are well defined and conformant, and that there exists a knowledgeable and competent source of resources to perform the design and development process.
• Evaluating the effectiveness of the DA enterprise attributes infrastructure within the Engineering and Design Functional Organizations independent of the program.
• Evaluating the process based on the DA enterprise attributes relevant to the specific program under evaluation.
• Selecting the DA activity lead and ensuring completion.
• Incorporating DA process lessons learned.

It is important that the individual(s) leading the DA process have experience relevant to the DA

activities being performed. They should have been significantly involved in executing design and

programs to understand program design constraints of the specific mission. Also, they should

understand the breadth and depth of the functional areas such as engineering, quality control, supplier

control, and manufacturing.

2.3 Enterprise Attributes Maturity Assessment

DA involves many aspects of a company, both at an enterprise level and a program level. In this

document, the enterprise-level capabilities are called DA enterprise attributes. Enterprise attributes

are implemented at a higher level and provide the framework within which the program design effort

is performed (i.e., they envelop the potential design space for the program under evaluation).

Enterprise attributes also include the command media (design standards or similar documentation)

that control the design effort and the infrastructure that is needed to create and maintain the integrity

of the design products such that they fully describe the intended product and support the manufacture,

test, delivery, use, and maintenance of the product.

A crucial step in performing an independent DA risk evaluation is a system level DA process gap

analysis in accordance with Appendix C, Design Assurance Enterprise Attributes/Capability

Checklist. Appendix C is a listing of the key enterprise attributes independent of any specific design

application related to DA and includes definitions, risk levels, and maturity rating descriptions. This

appendix can be used as a program resource to ensure coverage of key enterprise attributes to the

design, as a knowledge resource, to better understand specific design assessment attributes, and/or a

resource for evaluating (or analyzing) how well a company is implementing the DA process at any

given point in time. By understanding the aspects detailed in the different maturity ratings, an

organization can better understand what specific actions to implement to improve and mitigate

design risk.

Maturity levels are described for each enterprise attribute that range from an ideal implementation to

a more sporadic implementation of the DA process. As various changes commonly occur on

programs, assessments using the DA enterprise attributes maturity assessment should be performed

during the life cycle of the program and incorporated into DA program planning, discussed in Section

3.1. As DA processes become more mature, these enterprise attributes can and should evolve as risk

analysis and nonconformance and noncompliance trends are fully understood.

It is understood that each contractor, government agency, and federally-funded research and

development center will implement DA differently, and it is believed the DA process can add

significant value regardless of the specific implementation. By analyzing the enterprise attributes in

Appendix C and refining the DA process where appropriate, this DAG can be used to improve the

implementation of DA within any organization or program. This includes external suppliers that have design

authority (e.g., build-to-specification suppliers, which both design and manufacture).

The following is a list of 22 DA enterprise attributes that are in one of three categories: DA Process,

Design Engineering Tools, and DA Supplier Assessment.

DA Process
• Dedicated design subject matter experts network
• Integrated and cross functional design organizational structure with shared responsibilities and accountabilities
• Workforce capability and maturity
• Lessons learned and significant risk mitigation actions continuously embedded into command media and design guides
• Process discipline: consistency between documented processes and actual practice
• Robust nonconformance and noncompliance processes
• Useful engineering conformance and compliance metrics
• Documented DA definition and process requirements and DA plan
• Limited tailoring options for processes
• Realistic cost, schedule, and resource estimates committed in program proposal
• Defined product tailoring and design reuse
• Completed and controlled design integration

Design Engineering Tools
• Approved and common tools
• Robust design guides available and accessible
• Robust configuration and data management system
• DA requirements assessment to verify and validate
• Demonstrated technology readiness and manufacturing readiness

DA Supplier Assessment
• Effective and integrated supplier program management
• Controlled acceptance of supplier product/process
• Robust flow down of requirements to suppliers
• Early supplier involvement in design
• Robust process for handling furnished and supplied equipment

The DA Supplier Assessment enterprise attributes apply to suppliers who provide both build-to-print

(suppliers do not have design authority) and build-to-specification (suppliers have design authority)

products. It is recommended that suppliers with significant design responsibility (e.g.,

teammates/partners) self-assess against all the DA enterprise attributes using the DA enterprise

attributes/capability checklist, and that DA activities be planned commensurate with risk. This assessment can be

reviewed by higher-level customer representatives as required.

This assessment can indicate the strengths and weaknesses of the existing core engineering and

design organizations. An overall numeric score can be determined and used as an improvement

metric. Associating applicable life-cycle product phases, gates, and/or reviews to each enterprise

attribute can help understand and plan when to assess an organization’s maturity or capability. The

expected goal and weight shown in the DA enterprise attributes/capability checklist can be tailored

for programs of different scope (e.g. internal research and development, space vehicle level program,

or system program). Goals can be set, and, to clarify DA gaps further, the results can be

documented using a spider/radar diagram. See Appendix D, Spider/Radar Diagram Examples, for

additional information. Based on the results of gap analysis, the customer/mission assurance function

can review appropriate sub-processes to determine risk posture against the specific risk profile of

the program.
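As a rough illustration of the scoring and gap analysis described above, the following Python sketch computes a weighted overall score and lists the attributes that fall short of their goals. It is only a sketch under stated assumptions: the 1-5 maturity scale, the weights, the goals, the sample ratings, and all class and function names are hypothetical. The actual maturity levels, goals, and weights are those given in the Appendix C checklist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttributeRating:
    """One enterprise attribute with an assessed maturity, a goal, and a weight."""
    name: str
    category: str
    maturity: int  # assessed maturity level (hypothetical 1-5 scale)
    goal: int      # expected goal for this program scope (hypothetical)
    weight: float  # relative importance (hypothetical)


def overall_score(ratings):
    """Weighted average maturity, usable as an improvement metric."""
    total_weight = sum(r.weight for r in ratings)
    if total_weight == 0:
        raise ValueError("at least one attribute must have a nonzero weight")
    return sum(r.maturity * r.weight for r in ratings) / total_weight


def gaps(ratings):
    """Attributes below their goal, ordered by largest weighted shortfall first."""
    short = [r for r in ratings if r.maturity < r.goal]
    return sorted(short, key=lambda r: (r.goal - r.maturity) * r.weight, reverse=True)


# Hypothetical sample assessment, one attribute per category.
ratings = [
    AttributeRating("Workforce capability and maturity", "DA Process", 3, 4, 2.0),
    AttributeRating("Approved and common tools", "Design Engineering Tools", 4, 4, 1.0),
    AttributeRating("Early supplier involvement in design", "DA Supplier Assessment", 2, 4, 1.5),
]

print(f"overall score: {overall_score(ratings):.2f}")
for r in gaps(ratings):
    print(f"gap of {r.goal - r.maturity} in '{r.name}' ({r.category})")
```

The per-attribute maturity and goal values are the same pairs that would be plotted on a spider/radar diagram, so the gap list is a textual view of the same comparison.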

2.4 Design Assurance Implementation/Execution

Because the DA framework combines the core responsibility and accountability of the engineering and design organizations for people, processes, and tools with the program responsibility and accountability for execution to meet customer design requirements, effective implementation of the DA process requires a symbiotic partnership between the program and the enterprise organizations. When the system-level DA gap analysis reveals preventive and corrective action opportunities, the functional organizations need to take ownership of the actions that are systemic and affect the enterprise (multiple programs will be affected and future programs will benefit). The program organizations need to take ownership of the actions specifically related to their program’s execution of the DA processes and tools necessary to achieve the design products required by the customer. Having the functional organizations address the systemic actions will help increase process and tool commonality in the long run. For example, a product alert should be addressed by all the programs for containment, and systemic corrective action should be elevated to an appropriate preventive action board and/or corrective action board for review and implementation.

3. Design Assurance Program Implementation/Execution Process

The DA process that the DA team applies to each program, shown below the dotted line in Figure 1, is detailed in Figure 2. It encompasses the following activities: program planning, independent baseline assessment, activity planning, execution, and monitoring and reporting.

Figure 2. Design assurance process flow.

The DA process flow will be responsive to customer requirements as described in the plan program

sub-process.
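The five activities can be pictured as an ordered cycle. The Python sketch below is illustrative only: the enum and function names are hypothetical, and the loop from monitoring back to program planning is an assumption based on monitoring and reporting feedback being listed as an input to the plan program sub-process (Table 2). It is not a transcription of Figure 2.

```python
from enum import Enum


class DAActivity(Enum):
    """The five DA sub-processes, in the order of Sections 3.1 through 3.5."""
    PLAN_PROGRAM = "Plan Program"
    INDEPENDENT_BASELINE_ASSESSMENT = "Independent Baseline Assessment"
    PLAN_ACTIVITY = "Plan Activity"
    EXECUTE_ACTIVITY = "Execute Activity"
    MONITOR_REPORT_FINDINGS = "Monitor/Report Findings"


# Enum members iterate in definition order.
ORDER = list(DAActivity)


def next_activity(current):
    """Return the activity after `current`; the last step is assumed to feed planning."""
    index = ORDER.index(current)
    return ORDER[(index + 1) % len(ORDER)]


# Walk one full pass through the cycle, starting from program planning.
step = DAActivity.PLAN_PROGRAM
for _ in ORDER:
    print(step.value)
    step = next_activity(step)
```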

3.1 Plan Program

The first step in executing the DA process on a program is to develop the DA program plan. This plan

will establish the scope of the DA activities that will be executed independently of the program but

commensurate with program planning and identify specific areas of focus for mitigating program risk.

The DA plan program sub-process includes the following key steps: (1) collect all program

requirements documentation, (2) review program requirements documentation, and (3) complete

program DA plan. Table 2 summarizes the key inputs, process steps and outputs of the DA plan

program sub-process.

Table 2. Design Assurance Plan Program Sub-Process

Inputs:
• Customer and supplier requirements
    o Contract and subcontracts
    o Statement of work
    o Terms and conditions
    o Specifications and standards
    o Plans and schedules
    o Cost
    o Risk posture
• Design assurance program plan template
• Design assurance monitoring and reporting feedback
• Results of Enterprise Attributes Maturity Assessment

Key Steps of Process:
• Collect all program requirements documentation
• Review requirements documentation
• Complete/update design assurance plan

Outputs:
• Design assurance program plan

DA planning begins as early in the program life cycle as possible. This should include risk reduction

and proposal activities. A key component of this step is the establishment of the program’s overall

risk profile. For instance, a concept development program will likely accept a higher level of design

risk than an operational program. This risk profile can then be decomposed to each of the key

program elements to establish guidance on what activities the DA team will execute based upon the

specific risk the design element embodies. Appendix E, Design Assurance Program Elements,

contains a draft list of program elements that can be addressed by DA.
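One way to picture this decomposition is as a comparison between the level of design risk the program accepts and the risk assessed for each program element. The Python sketch below is a hedged illustration, not the Guide's method: the three-level scale, the element names, their ratings, and the function name are all hypothetical, and Appendix E lists the actual program elements.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Hypothetical three-level risk scale, ordered so levels can be compared."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def focus_elements(accepted_risk, element_risk):
    """Return program elements whose assessed risk exceeds what the program accepts."""
    return sorted(
        name for name, risk in element_risk.items() if risk > accepted_risk
    )


# Hypothetical element names and ratings, for illustration only.
element_risk = {
    "requirements flow-down": Risk.MEDIUM,
    "technology maturity": Risk.HIGH,
    "design reuse": Risk.LOW,
}

# A concept development program is assumed to accept more design risk
# than an operational program, so fewer elements are flagged for it.
print("concept development:", focus_elements(Risk.MEDIUM, element_risk))
print("operational:", focus_elements(Risk.LOW, element_risk))
```

The flagged elements are candidates for DA team focus. How much activity each one receives would still be planned commensurate with its risk.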

The DA team works closely with program management and integrated product team leads to access

relevant design documentation specific to the program development phase and DA activity.

Documentation collected includes proposals, program plans, requirements, designs, cost, and

schedule information. DA planning should be developed sufficiently.

An important source of data for the DA team is risk, anomaly, and nonconformance data relevant to

the enterprise attributes under evaluation. This data could include: watch list items, preventive action

board actions and status, discrepancy reports, failure review board data, integration returns,

independent review team reports, incidents, and hardware issues. Based on this data the program plan

should be updated as needed.

The DA team will review the design documents and analyze anomaly data to assess the risk posture

of the design against its baseline risk profile. Findings will be used to provide additional focus to the

planned DA activities. These DA focus areas could be a functional element of the design, plans and

executability (schedule, cost, staffing), technology maturity, and/or process executability. The DA

product at this step in the process is the initial version of the DA plan.

3.2 Independent Baseline Assessment

The second step in executing the DA process on a program is to perform an independent assessment

of risk for the program and analyze the DA risk. DA risk identification is the independent activity that

examines selected risk elements of the program to identify the associated root causes for the negative

findings identified above, begin their documentation, and set the stage for the following DA activities.

The DA independent baseline assessment sub-process includes the following key steps: (1)

independent identification of design risks, and (2) initial DA baseline risk analysis. Table 3

summarizes the key inputs, process steps, and outputs of the DA independent baseline assessment

sub-process.

Table 3. Design Assurance Independent Baseline Assessment Sub-Process

Inputs:
  Design assurance program plan
  Design assurance monitoring and reporting feedback
  Design assurance Enterprise Attributes Maturity Assessment results
  Government-industry data exchange program/design alerts
  Lessons learned
  Best practices
  Customer feedback
  Material review board/failure review board issues
  Corrective action reports
  Program risk list
  Interviews with program, engineering, mission assurance/quality, etc.

Key Steps of Process:
  Independent identification of design risks
  Initial design assurance baseline risk analysis (functional, programmatic, quality, cost, schedule, etc.)

Outputs:
  Independent selection of design risks/issues to perform design assurance activities

For programs to have a high potential for success, DA risk identification needs to begin as early as

possible and continue throughout the design life cycle with regular reviews and analyses of technical

performance measurements, schedule, resource data, life cycle cost information, earned value

management data/trends, progress against critical path, technical baseline maturity, safety, operational

readiness, and other program information available to the DA team members.

This step of the DA process provides for the independent identification of design risk and the initial

DA baseline risk analysis. The DA team will identify program design risks by addressing some of the

following:

Examining the technology readiness level of the program design elements.

Reviewing program planning for eliminating or mitigating technology readiness level

risks.

Examining resource allocation including current and proposed staffing profiles, process,

design, supplier, operational employment dependencies, etc.

Reviewing program planning with respect to coverage of key DA enterprise attributes

relevant to the program phase and design attribute under evaluation.

Examining key technical performance metrics against margin requirements.

Reviewing analysis products and the program incorporation of those products for managing

DA risks.

Monitoring test results throughout the design life cycle especially test failures (e.g.

engineering models, failure review boards, etc.).

Reviewing any other potential design shortfalls against initial requirements allocation as the

design matures.

Analyzing negative trends, reduced margins, schedule slips, funding shortfalls, engineering

changes, audit findings, customer feedback, etc.

Analyzing significant issues that are active or open.

Reviewing lessons learned database (e.g. 100 Questions for Technical Review – see Appendix

G).

Reviewing best practices (e.g. failure mode effects and criticality analysis, fault management,

systems engineering handbooks and guides – see Appendix G).

Reviewing results of the DA enterprise attributes assessment.

Evaluating risks from quality, functional, programmatic, and cost/schedule aspects.

Interviewing key business and functional leaders and asking them what concerns them about

the program (e.g. if not enough resources, what on the work breakdown structure is not

getting done).
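
One of the items above, examining key technical performance metrics against margin requirements, can be sketched in a few lines of Python. This is an illustration only: the class name, the metric names, and all numbers are hypothetical, and margin is assumed here to mean the unused fraction of the allocation, which may differ from a program's own definition.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TechnicalMetric:
    """Hypothetical record for one technical performance metric."""
    name: str
    allocation: float        # maximum value allowed by the requirement
    current_estimate: float  # current best estimate from the design
    required_margin: float   # required unused fraction of the allocation

    def margin(self) -> float:
        # Assumed definition: unused fraction of the allocation.
        return (self.allocation - self.current_estimate) / self.allocation

    def below_required_margin(self) -> bool:
        return self.margin() < self.required_margin


def flag_metrics(metrics: List[TechnicalMetric]) -> List[str]:
    """Return the names of metrics whose margin is below the requirement."""
    return [m.name for m in metrics if m.below_required_margin()]


# Illustrative values only.
metrics = [
    TechnicalMetric("dry mass (kg)", 1000.0, 950.0, 0.10),
    TechnicalMetric("bus power (W)", 2000.0, 1500.0, 0.15),
]
flagged = flag_metrics(metrics)
print(flagged)
```

Metrics flagged this way would be candidates for the DA baseline risk analysis rather than automatic findings.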

The aspects of DA that are applied at a program level are called DA program elements in this

document. Appendix E is a listing of the DA program elements which can be used as a tool during the

design process.

3.3 Plan Activity

The third step in executing the DA process on a program is to develop a DA activity plan. Unlike

the DA program plan (or program quality plan), the DA activity plan covers what, when, who, and

how the DA team will address the risks found. The activity plan will identify what specific

design risks and issues will be addressed, how they will be addressed, who will be addressing them,

and when they will be addressed. The DA plan does not need to be a separate plan and may be

included as an element of a broader mission assurance plan.

The DA plan activity sub-process includes the following key step: (1) develop the plan for executing

the DA activity. Table 4 summarizes the key inputs, process steps and outputs of the DA plan activity

sub-process.

Table 4. Design Assurance Plan Activity Sub-Process

Inputs:
  Independent selection of design risks/issues to perform design assurance activities

Key Steps of Process:
  Develop the plan for executing the design assurance activity

Outputs:
  Design assurance activity plan

The DA activity plan may include the following for each risk or issue that will be assessed:

System level and lower level design reviews. If these reviews are already occurring on the

program, duplication is not necessary; however, specific issues in those reviews may require

more scrutiny based upon previous DA findings.

Detailed description of the actions to be taken on the designated design area and their

relationship to program activities and milestones.

Identification of the appropriate experts required to perform the activity.

Schedule of the activities which includes mitigation of specific DA risks.

Risk burn down could be accomplished by both reduction in likelihood and changing the

impact or consequence of the risk occurring.

Decision points will be established based on the findings from the activities.

Additional resources required including program and/or functional support.

The level of detail in the DA activity plan can be scoped down commensurate with program need.
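
As a minimal sketch of the what, how, who, and when described above, one activity plan entry could be recorded as follows. The class, its field names, and the dates are hypothetical; the risk text is borrowed from the qualification by similarity example in this section.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class ActivityPlanEntry:
    """Hypothetical record for one risk or issue in the DA activity plan."""
    risk_or_issue: str   # what will be addressed
    actions: str         # how it will be addressed
    experts: List[str]   # who will address it
    start: date          # when the activity begins
    finish: date         # when the activity is due
    decision_points: List[str] = field(default_factory=list)
    additional_resources: List[str] = field(default_factory=list)

    def gaps(self) -> List[str]:
        """Return the planning questions that are still unanswered."""
        problems = []
        if not self.risk_or_issue.strip():
            problems.append("what")
        if not self.actions.strip():
            problems.append("how")
        if not self.experts:
            problems.append("who")
        if self.finish < self.start:
            problems.append("when")
        return problems


# Illustrative entry with no experts assigned yet.
entry = ActivityPlanEntry(
    risk_or_issue="Qualification by similarity process is not robust",
    actions="Review multiple qualification by similarity packages",
    experts=[],
    start=date(2009, 6, 4),
    finish=date(2009, 9, 1),
)
print(entry.gaps())
```

A check of this kind only confirms that the entry is complete; it says nothing about whether the planned actions are adequate for the risk.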

In the previous step selected risk elements of the program were identified. The risks identified will

be technical, process, or executability risks. Appendix E, Design Assurance Program Elements, is

a guide that can be used to identify risk. Assuming that the risks have been identified as low,

medium, or high risk levels, the actions in Table 5 can be used as guidance to address the

programmatic risks.

Table 5. Actions for Low, Medium, and High Risks

Low

  Technical Risks
    Capture the technical parameters on a watch list doing the following:
      o Document the specific issue for each parameter
      o Identify the responsible design owner
      o Determine when the process will be executed
      o Determine the re-visit criteria that could include schedule or events

  Process Risks
    Capture the process metrics on a watch list doing the following:
      o Document the specific issue for each process
      o Identify the responsible process owner
      o Determine when the process will be executed
      o Determine the re-visit criteria that could include schedule or events

  Executability Risks
    Work with specific program integrated product teams to determine executability risks against the contractual requirements baseline doing the following:
      o Place areas with tight margins on watch list
      o Document the specific issue
      o Identify the responsible design owner
      o Determine the re-visit criteria that could include earned value added performance deviations and/or cost/schedule re-baselines

Medium

  Technical Risks
    Perform an independent assessment of the design doing the following:
      o Review the specific design artifacts
      o Conduct interviews
      o Participate in technical working groups
      o Participate in technical reviews
    Follow design threads top down, bottom up, and cross integrated product teams. For top down and bottom up, utilize information on process and process flow, budget allocations, and common source reuse. For cross integrated product teams (at common level of design) use similarity of designs and components, and shared assembly processes.

  Process Risks
    Perform a process compliance assessment doing the following:
      o Gather and review the current process documentation and all relevant process waivers for the program
      o Notify the process owner and process users that a process assessment will be performed
      o Working with the process owner and process users, schedule the assessment
      o Request specific objective evidence from process users that will be used to evaluate process compliance
      o Review the objective evidence for process compliance
      o Capture any findings and necessary corrective actions
      o Work with the process owner and the program to reach closure on findings and corrective actions

  Executability Risks
    Work with specific integrated product teams to compare the program technical requirements baseline against baseline costing including work breakdown structure mapping, basis of estimates, labor spreads, and capital requirements doing the following:
      o Participate in program costing reviews
    Assist integrated product teams in formulating executability risks, including the risk to mission success at the current apportionment and level of funding and the de-scope(s) that would be required to execute within current budgets

Table 6. Actions for Low, Medium, and High Risks

High

  Technical Risks
    Perform an independent design analysis doing the following:
      o Execute a program-accepted design process

  Process Risks
    Perform a process compliance assessment doing the following:
      o Gather and review the current process documentation and all relevant process waivers for the program
      o Notify the process owner and process users that a process assessment will be performed
      o Working with the process owner and process users, schedule the assessment
      o Request specific objective evidence from process users that will be used to evaluate process compliance
      o Review the objective evidence for process compliance
      o Capture any findings and necessary corrective actions
      o Work with the process owner and the program to reach closure on findings and corrective actions

  Executability Risks
    Perform an independent assessment of the design doing the following:
      o Review the program technical requirements baseline against baseline costing including work breakdown structure mapping, basis of estimates, labor spreads, and capital requirements
      o Participate with the program by conducting integrated product team interviews and participating in program costing reviews
      o Assess specific executability risks and present to program. This should include the risk to mission success at the current apportionment and level of funding and de-scope(s) that would be required to execute within current budgets.

The actions in Tables 5 and 6 can be used as guidance to address the programmatic risks.
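
The low-risk actions above call for a watch list that records the specific issue, the responsible owner, and re-visit criteria based on schedule or events. A minimal sketch of such a list follows; the class, field names, issues, owners, dates, and event labels are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Set


@dataclass
class WatchListItem:
    """Hypothetical watch list entry for a low-level risk."""
    issue: str
    owner: str
    revisit_on: date                                         # schedule-based criterion
    revisit_events: Set[str] = field(default_factory=set)    # event-based criteria

    def needs_revisit(self, today: date, events: Set[str]) -> bool:
        # Re-visit when the date has passed or any trigger event has occurred.
        return today >= self.revisit_on or bool(self.revisit_events & events)


def due_items(items: List[WatchListItem], today: date, events: Set[str]) -> List[str]:
    """Return the issues that should be re-visited now."""
    return [item.issue for item in items if item.needs_revisit(today, events)]


# Illustrative entries only.
watch_list = [
    WatchListItem("Thermal margin on unit A", "Thermal lead", date(2010, 1, 15)),
    WatchListItem("Harness mass growth", "Mechanical lead", date(2010, 6, 1),
                  {"cost/schedule re-baseline"}),
]
due_by_date = due_items(watch_list, date(2010, 2, 1), set())
due_by_event = due_items(watch_list, date(2010, 1, 1), {"cost/schedule re-baseline"})
print(due_by_date, due_by_event)
```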

Organizations should use their own risk-management process to quantify risks to aid in decision

making and risk mitigation resource allocation. As an example the Risk Management Guide for

DOD Acquisitions [4] and ISO 17666: Space Systems – Risk Management, 1st Ed. [5] could be

used (see Appendix G).
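
As a rough illustration of quantifying a risk and mapping it to the technical-risk actions listed for low, medium, and high risks, the sketch below scores likelihood and consequence on 1 to 5 scales. The scales, the multiplication rule, and the thresholds are assumptions made for this example and are not taken from either referenced guide; an organization would substitute its own risk matrix.

```python
LOW, MEDIUM, HIGH = "low", "medium", "high"

# Lead-in actions for technical risks, as listed in this guide.
TECHNICAL_ACTIONS = {
    LOW: "Capture the technical parameters on a watch list",
    MEDIUM: "Perform an independent assessment of the design",
    HIGH: "Perform an independent design analysis",
}


def risk_level(likelihood: int, consequence: int) -> str:
    """Classify a risk from 1-5 likelihood and consequence ratings.

    The score thresholds (15 and 6) are hypothetical.
    """
    if not (1 <= likelihood <= 5 and 1 <= consequence <= 5):
        raise ValueError("likelihood and consequence must be between 1 and 5")
    score = likelihood * consequence
    if score >= 15:
        return HIGH
    if score >= 6:
        return MEDIUM
    return LOW


# Burn down by reducing likelihood while the consequence stays the same.
before = risk_level(4, 4)
after = risk_level(2, 4)
print(before, "->", after, ":", TECHNICAL_ACTIONS[after])
```

The last lines show burn down through a reduction in likelihood; lowering the consequence rating would move the risk through the same function in the same way.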

The following is an example of an activity in the DA activity plan. During the DA risk process it

was identified that the program’s qualification by similarity process was not robust. The activity

plan would review multiple qualification by similarity packages. This review would generate risk

findings that the DA team communicates to the program and functional groups. The DA team

stays engaged with the program and functional organization to help plan an activity to burn down

risk. DA success increases as DA becomes an active team player/partner in planning activities to

burn down risk. If the program chooses to do nothing about the risk, the DA team and process

owner would escalate the findings.

3.4 Execute Activity

The fourth step in executing the DA process on a program is to perform the DA activity.

The DA execute activity sub-process includes the following key steps: (1) execute the DA

activity plan, and (2) subject matter experts work with functional and program personnel. Table 7

summarizes the key inputs, process steps, and outputs of the DA execute activity sub-process.

Table 7. Design Assurance Execute Activity Sub-Process

Inputs:
  Design assurance activity plan

Key Steps of Process:
  Execute the design assurance activity plan
  Subject matter experts work with functional and program personnel

Outputs:
  Design assurance results and findings, including new risks, issues, actions, lessons learned, etc.
  Revise design assurance plan as needed

DA activities will vary based upon the program’s risk profile and classes of risks on each

program. The following provides a general guideline for the different risk levels on technical,

process, or executability categories of risk. Note that the majority of DA activities are not related

to specific program defined reviews. The DA execution activity may be divided into two

categories: (1) design assessment tasks and (2) process compliance tasks.

Design assessment tasks are those that address the risks identified in the plan. Those risks may be

cost, schedule, or technical in nature. First, collect and review the related design materials. The

subject matter experts should engage and interact with program design working groups. Informal

discussions with design engineers, participation in the various risk boards, and attending formal

design reviews are means to engage with those working groups.

A ‘thread’ approach is key to identifying many issues and risks quickly. This approach follows

threads both vertically and horizontally within the program structure. Vertical threads

include, but are not limited to, following a potential problem that may have been caused by a

previously performed process (usually at a higher architecture level), budget allocations, or

common source reuse. Horizontal threads include following potential problems by looking at

similarities between unit designs, common sub-assembly designs, shared assembly processes, and

requirement allocations. Many potential problems may be uncovered in this fashion and may be

instantly resolved by communicating with the affected design team(s).
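
A horizontal thread can be pictured as a search for other units that share something with the unit where a potential problem was found. The sketch below is one simple way to do that, assuming each unit is described by a set of shared elements (sub-assembly designs, assembly processes, and so on); the function name, unit names, and element names are hypothetical.

```python
from typing import Dict, Set


def horizontal_thread(units: Dict[str, Set[str]], problem_unit: str) -> Dict[str, Set[str]]:
    """Return the other units that share at least one element with problem_unit.

    The result maps each related unit to the elements it has in common.
    """
    shared = units[problem_unit]
    related = {}
    for name, elements in units.items():
        if name == problem_unit:
            continue
        common = shared & elements
        if common:
            related[name] = common
    return related


# Illustrative design data only.
units = {
    "unit A": {"power converter sub-assembly", "solder process P1"},
    "unit B": {"power converter sub-assembly"},
    "unit C": {"solder process P2"},
    "unit D": {"solder process P1", "connector type X"},
}
related = horizontal_thread(units, "unit A")
print(related)
```

Each related unit identifies a design team that may need to hear about the potential problem.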

If, in the course of investigation, a thread identifies a new potential risk or issue that may have a

significant effect on program execution, the DA team may initiate an independent assessment

(i.e., a technical evaluation) of the design process. This risk or issue would be elevated to the

appropriate levels in coordination with program management.

Process compliance tasks are those that address the engineering process risks identified in the

program plan. The first step is to communicate to the process owner and users that a process

assessment is to be performed. Next, process documentation is collected and reviewed. Objective

evidence is assessed for compliance to the process documentation.

Any findings, corrective actions, lessons learned, etc., are collected for the last step of the

process.
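
The comparison of objective evidence against process documentation can be sketched as a simple check. The example assumes the documented process is available as a list of required steps and that approved waivers remove a step from the check; the function name, step names, and evidence descriptions are hypothetical.

```python
from typing import Dict, FrozenSet, List


def assess_compliance(required_steps: List[str],
                      evidence: Dict[str, List[str]],
                      waivers: FrozenSet[str] = frozenset()) -> List[str]:
    """Return one finding for each required step that lacks objective evidence.

    Steps covered by an approved waiver are skipped.
    """
    findings = []
    for step in required_steps:
        if step in waivers:
            continue
        if not evidence.get(step):
            findings.append(f"No objective evidence for step: {step}")
    return findings


# Illustrative process and evidence only.
required_steps = ["peer review", "stress analysis", "drawing sign-off"]
evidence = {
    "peer review": ["review minutes"],
    "drawing sign-off": [],
}
findings = assess_compliance(required_steps, evidence, waivers=frozenset({"stress analysis"}))
print(findings)
```

The resulting findings would then be carried into the monitoring and reporting step along with any corrective actions.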

3.5 Monitor/Report Findings

The fifth, and final, step in executing the DA process on a program is to monitor and report the

findings from the DA activities. At the conclusion of each DA activity, the results and corrective

actions, if any, will be documented and communicated to the program and functional

organizations as appropriate. Any activities identified as a risk will be monitored by the

DA team until they are removed.

The DA monitor/report findings sub-process includes the following key steps: (1) watch the DA

results and findings identified as areas of risk, (2) report the results and findings, and (3) escalate

findings to senior management. Table 8 summarizes the key inputs, process steps, and outputs of

the DA monitor/report findings sub-process.

Table 8. Design Assurance Monitor/Report Findings Sub-Process

Inputs:
  Design assurance results and findings, including new risks, issues, actions, etc.

Key Steps of Process:
  Watch results and findings identified as risk areas
  Report results and findings
  Escalate findings

Outputs:
  Design assurance report to programs, engineering, process owners, mission assurance
  Risks to risk management process
  Watch list items to program mission assurance and design assurance process owner
  Lessons learned to affected process owner
  Feedback to design assurance program plan

Results and findings discovered from the previous step must be monitored to assure they are

properly resolved. The program must decide whether or not it will act on the DA team output;

however, the DA team has a responsibility to verify and validate how each of the findings was

acted upon. If the DA team feels that a particular result that has significant risk has not been acted

on properly, the findings can be elevated within the organization.
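
The monitoring and escalation logic in the paragraph above can be sketched as follows. The class and its fields are hypothetical, and whether a finding carries significant risk or has been acted on properly is assumed to be a judgment recorded by the DA team, not something the code decides.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    """Hypothetical record for one DA finding."""
    title: str
    significant_risk: bool     # DA team judgment
    resolution_verified: bool  # DA team has verified the finding was acted on properly


def still_monitored(findings: List[Finding]) -> List[str]:
    """Findings remain monitored until their resolution is verified."""
    return [f.title for f in findings if not f.resolution_verified]


def to_escalate(findings: List[Finding]) -> List[str]:
    """Significant-risk findings that have not been acted on properly."""
    return [f.title for f in findings
            if f.significant_risk and not f.resolution_verified]


# Illustrative findings only.
findings = [
    Finding("Qualification by similarity package incomplete", True, False),
    Finding("Drawing note inconsistency", False, False),
    Finding("Missing peer review record", True, True),
]
print(still_monitored(findings))
print(to_escalate(findings))
```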

Activity results could include a summary of the actions taken, the design teams participating,

and/or the design processes involved. Specific actions or corrections will be documented. This

may include reporting of design changes instituted as part of the DA activity or recommended

design changes. For recommended DA actions, the report will include the responsible integrated

product team on the program and the recommended actions with a due date commensurate with

program milestones.

The report is maintained by program mission assurance and any new risks which have been

identified in the DA process are to be inserted into the program risk list for monitoring and

tracking. The DA team will also communicate all the findings and corrective actions (completed

and pending) to the appropriate functional engineering and mission assurance organizations for

their specific actions and for incorporation into their respective lessons-learned databases.

4. Conclusions

This DAG provides a process for performing DA that uncovers design risks as early in the design

cycle as possible. The Guide can be used by any organization involved in the acquisition of a

space system. The Topic Team developed this Guide specifically to establish a definition of DA,

identify key DA enterprise attributes and program elements, and formulate a risk-based DA

process flow. From the beginning of this activity, it was clear that the scope of the Guide was

going to be focused on developing a process for performing DA and that many other aspects of

DA would need to be addressed by others at a later date. As such this Guide does not cover all

areas of DA and those areas that are covered can be developed in further detail. It is the hope of

the Topic Team that this content can be used as a starting point for the aerospace community and

will lead to further DA development.

During the development of the DAG, substantial comments and feedback have been provided and

incorporated. Appendix F captures some of the questions that were posed to the Topic Team,

along with responses to those questions. A number of useful references have been identified that

can be used to support DA activities, and are captured in Appendix G. Finally, Appendix H is a

glossary for some of the key terms used in the DAG.

Appendix A. Failures and Design Assurance

Recent Aerospace studies strongly suggest design issues account for 40% of all failures, far

exceeding other failure causes, which shows there are escapes with current design practices [3].

A 1997 Aerospace study shows design issues accounted for a much smaller number of on-orbit

failures [6]. The data in Figure A-1 shows design assurance anomalies accounting for 19% of the

on-orbit failures.

[Figure A-1: bar chart of orbital failures (%), on a scale of 0 to 45, by cause (Workmanship, Part, Design, Unknown, Environment, Software, Operational), with two series: All and Infant Mortality.]

Figure A-1. Past (1997) on-orbit failure causes.

Comparing the 1997 data in Figure A-1 with recent studies shows that on-orbit failures due to

design issues have significantly increased over the last 10 years.

Infant mortality means that the problem manifested itself as an early failure, within the first 90 days.

Examples of infant mortality design issues include deployment failure due to critical clearance,

inappropriate part usage allowed due to design allowance failure, unit failure due to lack of

design analysis, and inadequate testing.

Appendix B. Acquisition Life Cycle and Design Assurance

About three-quarters of the total system life cycle costs are based on decisions made before

Milestone A [7]. This means the decisions made in the pre-Milestone A period are critical to

avoiding or minimizing cost and schedule overruns later in the program. Design assurance is

predominantly performed in pre-systems acquisition, or done before Milestone B, and

consequently has a critical impact on the system life-cycle cost. Figure B-1 shows how design

assurance relates to the Defense Acquisition System [6].

[Figure B-1 labels:
  High ability to influence life cycle cost (70 to 75% of cost decisions made)
  Less ability to influence life cycle cost (85% of cost decisions made)
  Little ability to influence life cycle cost (90 to 95% of cost decisions made)
  Minimum ability to influence life cycle cost (95% of cost decisions made)
  Design Assurance]

Figure B-1. Design assurance and the defense acquisition management system [8].

Appendix C. Design Assurance Enterprise Attributes/Capability Checklist

This appendix lists the design assurance enterprise attributes identified by the team as being key

to the implementation of design assurance at an enterprise level. This appendix can be used as a

program resource to ensure coverage of key enterprise attributes to the design, as a knowledge

resource, to better understand specific design assessment attributes, and/or a resource for

evaluating (or analyzing) how well a company is implementing the design assurance process at

any given point in time. By understanding the aspects detailed in the different maturity ratings, an

organization can better understand what specific actions to implement to improve and mitigate

design risk. Maturity levels are described for each attribute that range from an ideal

implementation to a more sporadic implementation of the design assurance process. As various

changes commonly occur on programs, assessments using the design assurance capability

checklist should be performed during the development life cycle to account for additional design

assurance risk areas. As design assurance processes become more mature, these enterprise

attributes can and should evolve as risk analysis and nonconformance and noncompliance trends

are fully understood.

Similar to the Capability Maturity Model® Integration definition of maturity level, “a maturity

level consists of related specific and generic practices for a predefined set of process areas that

improve the organization's overall performance. The maturity level of an organization provides a

way to predict an organization's performance in a given discipline or set of disciplines. A

maturity level is a defined evolutionary plateau for organizational process improvement. Each

maturity level matures an important subset of the organization's processes, preparing it to move to

the next maturity level. The maturity levels are measured by the achievement of the specific and

generic goals associated with each predefined set of process areas.” [9]

Generic goals for each of the five maturity levels are described in Table C-1. Note that each level

creates a foundation for ongoing process improvement. Rather than uniquely define these

maturity levels for design assurance, the maturity levels as defined in the Capability Maturity

Model® Integration: Guidelines for Process Integration and Product Improvement, Second

Edition, were adopted in part. These generic goals shall be supplemented with the specific goals

listed in the description of maturity levels for the design assurance enterprise attributes.

Table C-1. Generic Maturity Level Descriptions

Maturity Level 1: Initial

At maturity level 1, processes are usually ad hoc and chaotic. The organization usually does not provide a stable environment to support the

processes. Success in these organizations depends on the competence and heroics of the people in the organization and not on the use of proven

processes. In spite of this chaos, maturity level 1 organizations often produce products and services that work; however, they frequently exceed

their budgets and do not meet their schedule. Maturity level 1 organizations are characterized by a tendency to over commit, abandonment of

processes in a time of crisis, and an inability to repeat their successes.

Maturity Level 2: Managed

At maturity level 2, the projects of the organization have ensured that processes are planned and executed in accordance with policy; the projects

employ skilled people who have adequate resources to produce controlled outputs; involve relevant stakeholders; are monitored, controlled, and

reviewed; and are evaluated for adherence to their process descriptions. The process discipline reflected by maturity level 2 helps to ensure that

existing practices are retained during times of stress. At maturity level 2, status of the work products and the delivery of services are visible to

management at defined points. Commitments are established among relevant stakeholders and are revised as needed. Work products are

appropriately controlled. The work products and services satisfy their specified process descriptions, standards, and procedures.

Maturity Level 3: Defined

At maturity level 3, processes are well characterized and understood and are described in standards, procedures, tools and methods. The

organization’s set of standard processes, which is the basis for maturity level 3, is established and improved over time. These standard processes

are used to establish consistency across the organization. Projects establish their defined processes by tailoring the organization's set of standard

processes according to tailoring guidelines. At maturity level 3, processes are managed more proactively using an understanding of the

interrelationships of the process activities and detailed measures of the process, its work products, and its services.

Maturity Level 4: Quantitatively Managed

At maturity level 4, the organization and projects establish quantitative objectives for quality and process performance and use them as criteria in

managing processes. Quantitative objectives are based on the needs of the

customer, end users, organization, and process implementers.

Maturity Level 5: Optimizing

At maturity level 5, an organization continually improves its processes based on a quantitative understanding of the common causes of variation

inherent in processes. Maturity level 5 focuses on continually improving process performance through incremental and innovative process and

technological improvements. Quantitative process improvement objectives for the organization are established, continually revised to reflect

changing business objectives, and used as criteria in managing process improvement. The effects of deployed process improvements are

measured and evaluated against the quantitative process improvement objectives. Both the defined processes and the organization's set of

standard processes are targets of measurable improvement activities.

The following 25 pages are a collection of 22 design assurance enterprise attributes. These enterprise

attributes have been separated into one of three categories: Design Assurance Process, Design

Engineering Tools, and Design Assurance Supplier Assessment. This list is not necessarily exhaustive

and may be evolved to add and/or delete enterprise attributes as deemed appropriate by any

organization. The risk impact is discussed and score, goals, and weighting columns are left to the

Guide user to tailor to their specific needs. Some of the more common terms used in the design

assurance enterprise attributes have been abbreviated and are:

DA: Design assurance

DE: Design engineering

IPT: Integrated product team

RAA: Responsibility, accountability, authority

SME: Subject matter expert

SE: System Engineering
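
Because the score, goal, and weight columns are left to the Guide user, the following sketch shows only one possible way to combine them. The weighted-gap rule, the function name, and the example values are assumptions; the attribute names are shortened from the attributes in this appendix.

```python
from typing import List, Tuple

# (attribute, score, goal, weight); score and goal are maturity levels 1 to 5.
Assessment = Tuple[str, int, int, int]


def weighted_gaps(assessments: List[Assessment]) -> List[Tuple[str, int]]:
    """Rank attributes by weight times shortfall against the goal.

    Attributes that meet or exceed their goal get a gap of zero.
    """
    gaps = []
    for name, score, goal, weight in assessments:
        gap = max(goal - score, 0) * weight
        gaps.append((name, gap))
    # Largest weighted gap first; ties keep their input order.
    return sorted(gaps, key=lambda item: item[1], reverse=True)


# Illustrative scores, goals, and weights only.
assessments = [
    ("Dedicated design SME network", 2, 4, 3),
    ("Workforce capability and maturity", 4, 4, 2),
    ("Lessons learned embedded into command media", 3, 5, 1),
]
ranked = weighted_gaps(assessments)
print(ranked)
```

A ranking like this could help an organization decide which enterprise attributes to improve first, subject to its own tailoring of the columns.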

Design Assurance Process – 1

Dedicated design subject matter experts network

Columns: Applicable Phases of Project/Life Cycle/Gate/Review; Score; Goal; Weight; Comments/Objective Evidence

Definition: A network of subject matter experts (SME) that is used to support DA reviews, provide

input to lessons learned, and support development and modification of design guides. This network

is maintained through a certification process or other formal means.

Risk Impact: SMEs are a key component of the DA process. Lack of identified SMEs presents a

high risk to success of the effort.

Maturity Level 1 No SMEs have been officially identified.

Maturity Level 2 There are SMEs, but their use varies by organization.

Maturity Level 3 There is a network of SMEs, but there is not a centralized, accessible listing,

or they are kept by several organizations.

Maturity Level 4 A network that identifies subject matter experts exists, is readily accessible,

and is utilized. However, there is no certification process.

Maturity Level 5 A network that identifies subject matter experts exists, is readily accessible,

and is utilized. These experts have been certified through a standard

process and are recognized for their expertise. The SMEs have

responsibility to share their knowledge in a way that it can be effectively

leveraged throughout an organization.

Design Assurance Process – 2

Integrated and cross functional design organizational structure with clearly defined RAAs and overall

shared responsibilities and accountabilities for product throughout program life cycle

Columns: Applicable Phases of Project/Life Cycle/Gate/Review; Score; Goal; Weight; Comments/Objective Evidence

Definition: Design groups on a program consist of team members from cross functional

organizations such as engineering (design, stress, etc.), manufacturing, tooling, materials and

processing, supplier management, quality, customer or mission assurance. Team members are

appropriately represented and share responsibility and accountability for overall product cost,

schedule, performance, and delivery.

Risk Impact: Having cross functional representation mitigates design for manufacturing, assembly,

and test issues and allows earlier preventive action.

Maturity Level 1 Design is done in series: Design hands off to stress, to manufacturing, etc.

Maturity Level 2 Design is done concurrently (in parallel) but no shared responsibility and

accountability between the functions.

Maturity Level 3 Design organization is organized as an integrated product team (IPT) but

engineering holds all responsibility and accountability for the product, and

therefore holds all decision making power. Customers are included in

reviews only as required by contract.

Maturity Level 4 Design organization is organized as an IPT (on paper) but realistically

operates only as an integrated product team late in the program when

design and development are near complete. Customer review occurs at final

design. Prior to this, IPT is engineering centric.

Maturity Level 5 IPT operates as such throughout the program and product life cycle with

shared RAA for product design, cost, schedule etc. and decisions are made

as a team with customer review as needed.

Design Assurance Process – 3

Workforce capability and maturity

Columns: Applicable Phases of Project/Life Cycle/Gate/Review; Score; Goal; Weight; Comments/Objective Evidence

Definition: Personnel supporting the DE, SE, etc., tasks have sufficient training to excel at their

respective duties. This includes the designers, analysts, systems engineers, quality engineers and

DA personnel as well as support functions that give inputs to design. These functions include

manufacturing, test, assembly, integration as well as materials and processes, quality, etc.

Risk Impact: All people need to be trained and have a training plan that is actively managed. RAA

agreements between the different DE and DA roles must be clearly defined. Clear RAAs for design

interfaces (physical part, process, tool) need to be fleshed out and agreed to by affected

organizations and executed by functional organizations and programs as required.

Maturity Level 1 Adequacy of training specific to job functions is in question. Only training

required by the enterprise is tracked.

Maturity Level 2 All personnel are adequately trained in their specific duties. Little or no

training specific to the DA process.

Maturity Level 3 All personnel are adequately trained in their specific duties. DE training and

certification is timely (e.g., performer awareness, DA training, DE/DA

training, SE requirements training). Only the personnel directly supporting

DA are trained regarding the DA process.

Maturity Level 4 All personnel are adequately trained in their specific duties. Some personnel

are trained regarding the DA process sufficiently to support multiple DA

tasks as required.

Maturity Level 5 All personnel are adequately trained in their specific duties. In addition, all

personnel are trained regarding the DA process sufficiently to support

multiple DA tasks as required.

Design Assurance Process – 4

Lessons learned and significant risk mitigation actions continuously embedded into command media

and design guides.

Applicable Phases of Project/Life Cycle/Gate/Review | Score | Goal | Weight | Comments/Objective Evidence

Definition: The lessons learned process is “closed loop.” Not only are the data gathered and

disseminated by a database (or other means), but the lessons learned are also incorporated into the design

guides and other command media so that they become part of the way of doing business. Training

regarding the lessons learned is included in actively managed training plans.

Risk Impact: Incorporating lessons learned into design guides or other command media is the most

effective way to ensure that the information is used by the personnel performing the DE tasks.

Maturity Level 1 Only minimal thought is given to lessons learned when creating or updating

command media such as design guides.

Maturity Level 2 Lessons learned are considered when updating the command media (such

as design guides). The frequency of updates is not consistent.

Maturity Level 3 There is a formal process in place to incorporate lessons learned into

command media (such as design guides). This process may not be

performed consistently. Training and/or notification regarding this

documentation of lessons learned is not consistently flowed to users.

Maturity Level 4 There is a formal process in place to incorporate lessons learned into

command media (such as design guides). This process is performed

whenever a design guide or other command media is updated. Training

and/or notification regarding changes to documentation is not consistently

flowed to those affected.

Maturity Level 5 There is a formal and regular process in place to incorporate lessons

learned into command media (such as design guides). This process is

performed frequently to ensure that the lessons-learned information is

included in a timely fashion. The process includes flow down of new and

revised command media to all affected. Personnel are made aware of all process

changes prior to release through training and/or notification.

Design Assurance Process – 5

Process discipline - Actual practice consistent with approved, documented processes

Applicable Phases of Project/Life Cycle/Gate/Review | Score | Goal | Weight | Comments/Objective Evidence

Definition: The processes used by personnel supporting DE tasks are approved utilizing a formal process

or by a designated organization. These processes are readily accessible, and in a form that is utilized by

the personnel. This includes, but is not limited to, the DA process.

Risk Impact: Having a documented set of processes within an organization/program is the key to process

commonality across a broader organization where feasible. When employees know the processes they are

responsible for and have documented the best practice of the process, they not only follow the process with

more discipline but they also will likely improve the process documents as warranted.

Maturity Level 1 Processes exist, but they may or may not be approved, accessible, utilized, or

updated in a consistent manner. Variation exists across functions and interfacing

engineering groups.

Maturity Level 2 Some processes used by personnel supporting DE tasks are approved utilizing a

formal process or by a designated organization. Approval is not always consistent

for all design related processes, process discipline varies or these processes may

not be readily accessible. Processes may be evaluated and updated as required to

support changes. Noncompliance with process (e.g., design review entrance and exit

criteria) results in travelled work/risk. Travelled risk is recognized but not managed

as part of the risk process.

Maturity Level 3 All processes used by personnel supporting DE tasks are approved utilizing a

formal process or by a designated organization. These processes are readily

accessible, but may be too cumbersome for some personnel to use (i.e., perceived

as restrictive by programs), and hence implementation is limited. Process deviations

are continually used without the documented processes being evaluated and updated as

required to support changes and refinements in the operations. Travelled risk due

to process noncompliance is adequately managed within the overall risk

management process (e.g., open issues from design reviews).

Maturity Level 4 All processes used by personnel supporting DE tasks are approved utilizing a

formal process or by a designated organization. These processes are readily

accessible, but may not be utilized consistently by all personnel. Processes are

continually evaluated and updated as required to support changes and refinements

in the operations. Process compliance is the norm, so travelled risk due to process

noncompliance is minimized.

Maturity Level 5 All processes used by personnel supporting DE tasks are approved utilizing a

formal process or by a designated organization. These processes are readily

accessible, and in a form that is utilized by all personnel. Processes are continually

evaluated and updated as required to support changes and refinements in the

operations.

A culture of openness allows anyone in the organization to raise

process noncompliances that jeopardize the quality of design deliverables.

Design Assurance Process – 6

Robust nonconformance and noncompliance processes—Integrity of the problem identification,

containment actions, root cause(s) determination, systemic corrective actions, and corrective and

preventive action verification of effectiveness

Applicable Phases of Project/Life Cycle/Gate/Review | Score | Goal | Weight | Comments/Objective Evidence

Definition: Evaluation of the effectiveness of the corrective action processes employed in the DE

effort. Includes metrics to measure the effectiveness. Allows feedback to improve the corrective

action process. Also includes a robust and timely technical alert process that ensures all programs are

aware of technical issues that may affect them and that containment across all programs is

timely and thorough.

Risk Impact: Many engineering problems result in changes to engineering processes. There needs

to be a process (e.g., plan, do, check, act or define, measure, analyze, improve, control) to verify

that the revised/new process was effective in solving the problems. If problems recur, this indicates

either a breakdown in corrective action or an incorrect root cause. For significant technical issues, an

alert process must be in place to ensure that other programs and organizations are made aware of

the issue and action is taken to mitigate recurrence.

Maturity Level 1 Engineering problems are mostly addressed with containment actions, with

no effort toward root cause analysis or needed corrective action. Very

little focus on process. Focus is on fixing the product and moving it down the

manufacturing line.

Maturity Level 2 As a result of engineering issues, systemic fixes involving process changes

are identified but inconsistently executed. Technical issues are discussed

but there is no formal process to alert and track impact across other

programs or organizations. Repeat issues occur on other programs.

Maturity Level 3 As a result of engineering issues, corrective actions involving process

changes or development are worked by the responsible

organization/program and implemented, but only seem to be executed on the

near-term program. Process changes are not institutionalized across

programs and/or products.

Maturity Level 4 As a result of engineering issues, corrective actions involving process

changes or development are worked as an integrated team by the functions

and programs that share the RAA. The corrective action is implemented but

only seems to be executed on the near-term programs and does not

adequately capture new programs. Process changes are not

institutionalized across programs and/or products.

Maturity Level 5 When process changes are implemented to fix an engineering problem,

follow-up is done to verify that the process change was effectively

implemented and no recurrence of problems is evident. If engineering

noncompliance and nonconformance continue to indicate that the process